Developing Secure Apps with Artificial Intelligence: A Comprehensive Guide

As AI becomes more deeply integrated into applications, developers must guard against threats that target models and training data as well as code. This article is a guide to understanding and implementing secure AI development practices.

Understanding the Intersection of AI and Application Security

Integrating artificial intelligence into applications offers many benefits, from better user experiences to streamlined processes. It also introduces new security challenges that traditional security measures may not fully address. This section covers the core principles of AI security and how they relate to general application security practice, including the AI lifecycle and the vulnerabilities that can be introduced at each stage.

The Unique Security Risks of AI Applications

AI applications are susceptible to a range of specific security risks, including:

- Data Poisoning: Attackers can manipulate training data to compromise the AI model’s accuracy and reliability.

- Model Inversion: Attackers query or inspect the AI model to reconstruct sensitive information about the data it was trained on.

- Adversarial Attacks: Carefully crafted inputs are designed to fool the AI model and cause it to make incorrect predictions.

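- Evasion Attacks: A form of adversarial attack applied at inference time, in which inputs are modified so that an AI-based security mechanism, such as a spam or malware classifier, fails to flag them.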

- AI Model Theft: Unauthorized copying or stealing of an AI model, which can be used for malicious purposes or sold to competitors.

These risks highlight the need for specialized security strategies tailored to AI-powered applications.

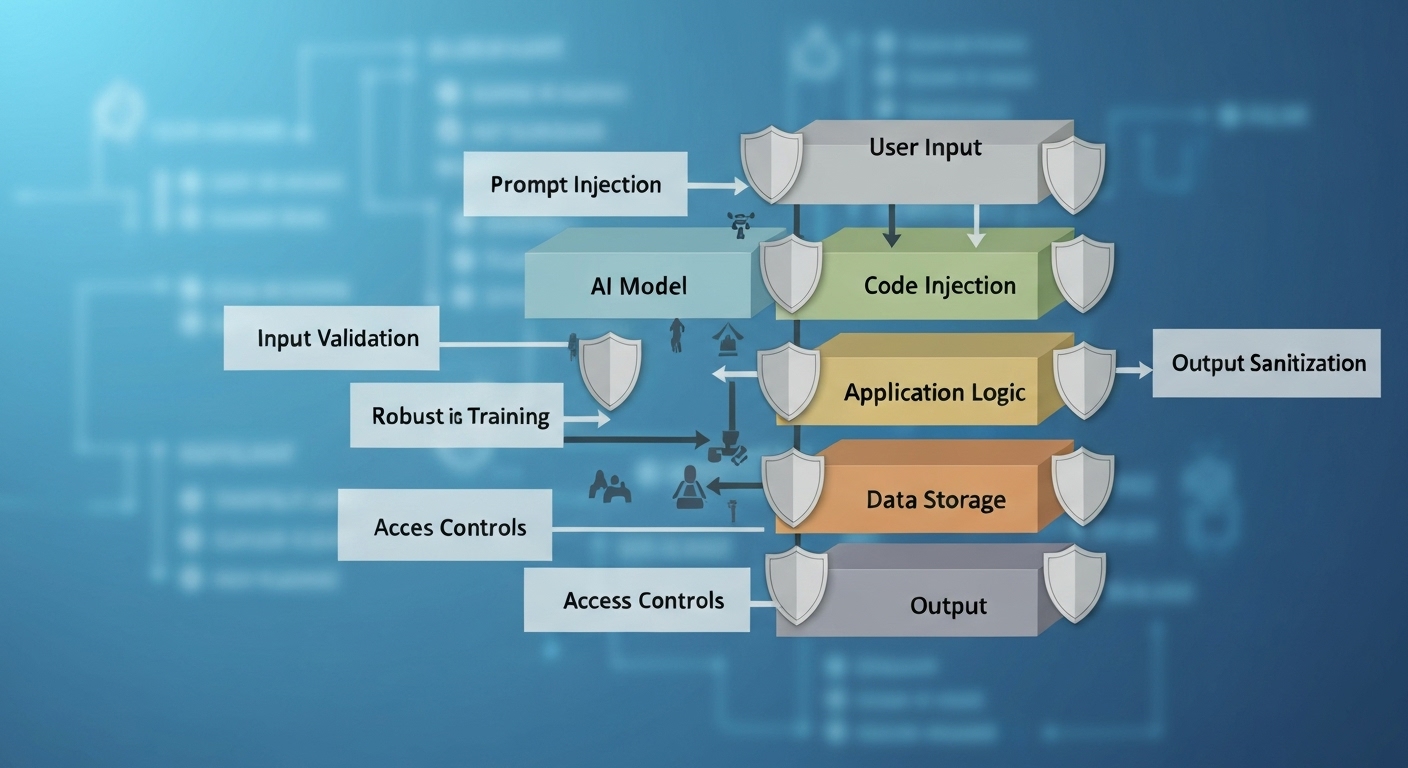

Best Practices for Developing Secure Apps with Artificial Intelligence

To effectively mitigate the risks associated with AI-powered applications, developers should adopt a proactive and comprehensive approach to security. Here are some essential best practices:

Secure AI Development Lifecycle

Implement a secure AI development lifecycle that incorporates security considerations at every stage, from data collection and model training to deployment and monitoring. This includes:

- Data Security: Protecting the confidentiality, integrity, and availability of training data.

- Model Security: Implementing measures to prevent model tampering and theft.

- Deployment Security: Securing the infrastructure and environment in which the AI model is deployed.

- Monitoring and Auditing: Continuously monitoring the AI model’s performance and behavior to detect anomalies and potential security breaches.

Ensure that all data used for training is properly sanitized and validated to prevent data poisoning attacks.
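
One small piece of data and model integrity can be sketched in code: recording a SHA-256 digest of each training file or model artifact when it is approved, and checking that digest again before the file is used. This is a minimal sketch using only the Python standard library. The function names are illustrative, and it assumes the expected digest is stored somewhere an attacker cannot modify.

```python
import hashlib
import hmac
from pathlib import Path


def sha256_of_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Return the hex SHA-256 digest of a file, reading it in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_artifact(path: Path, expected_hex: str) -> bool:
    """Compare the file's digest with a trusted value in constant time."""
    return hmac.compare_digest(sha256_of_file(path), expected_hex.lower())
```

A check like this detects tampering after approval. It does not tell you whether the approved data was clean in the first place.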

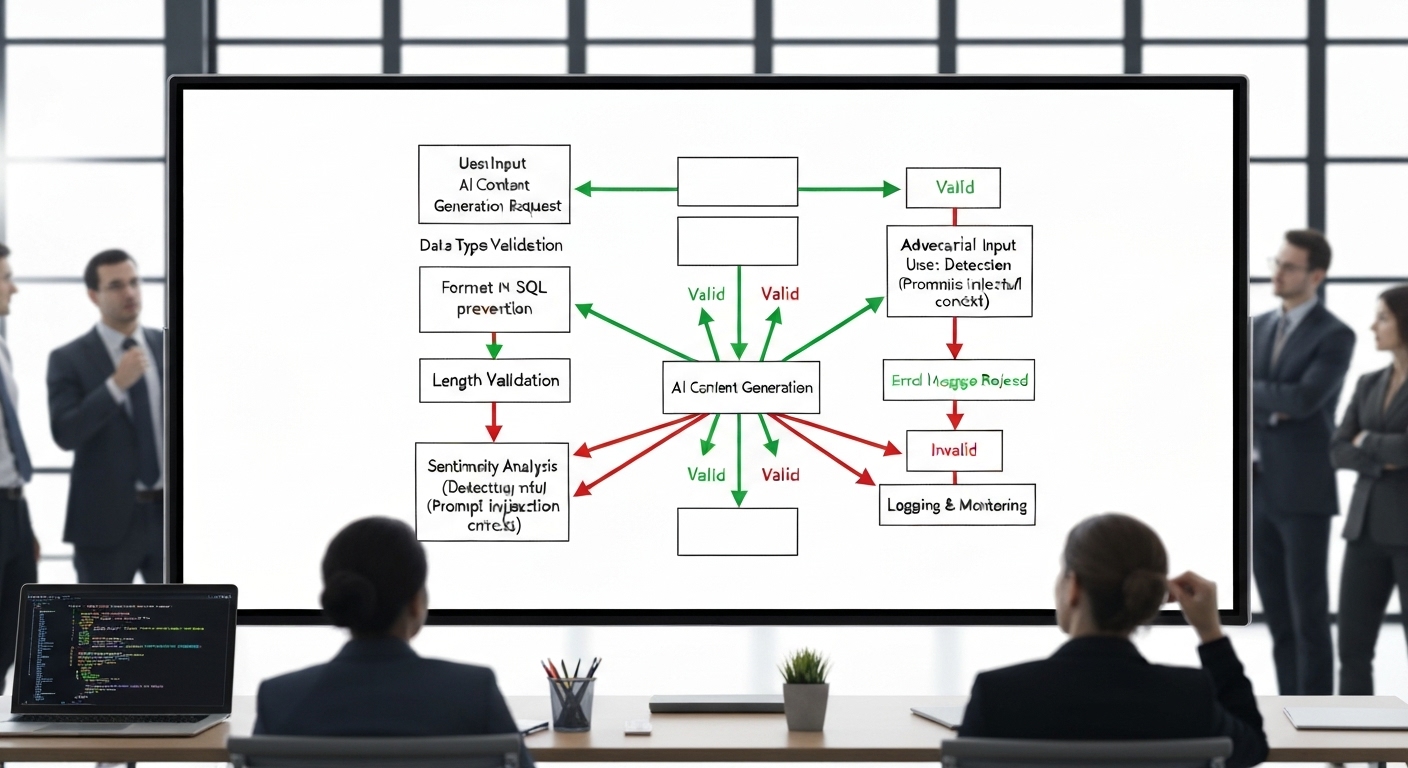

Robust Input Validation and Sanitization

Implement rigorous input validation and sanitization techniques to make adversarial attacks harder to carry out. This includes:

- Input Filtering: Filtering out potentially malicious or invalid inputs.

- Input Normalization: Normalizing inputs to a consistent format to prevent manipulation.

- Anomaly Detection: Using anomaly detection techniques to identify unusual or suspicious inputs.

Validation and sanitization will not stop every adversarial input, because many adversarial examples look like valid data, but they narrow the attack surface and help protect the integrity of the AI model’s predictions.
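
The filtering and normalization steps can be sketched as follows. The example assumes a hypothetical model that takes two numeric features, `age` and `amount`. The schema, the field names, and the allowed ranges are illustrative assumptions, not part of any particular framework.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class FeatureSpec:
    name: str
    minimum: float
    maximum: float


# Hypothetical schema for an illustrative model input.
SCHEMA = (
    FeatureSpec("age", 0.0, 120.0),
    FeatureSpec("amount", 0.0, 10_000.0),
)


def validate_and_normalize(raw: dict) -> list[float]:
    """Reject malformed input, then scale each feature to [0, 1]."""
    unexpected = set(raw) - {spec.name for spec in SCHEMA}
    if unexpected:
        raise ValueError(f"unexpected fields: {sorted(unexpected)}")
    features = []
    for spec in SCHEMA:
        if spec.name not in raw:
            raise ValueError(f"missing field: {spec.name}")
        value = raw[spec.name]
        # bool is a subclass of int in Python, so exclude it explicitly.
        if isinstance(value, bool) or not isinstance(value, (int, float)):
            raise ValueError(f"{spec.name} must be a number")
        try:
            value = float(value)
        except OverflowError:
            raise ValueError(f"{spec.name} is out of range") from None
        if not math.isfinite(value):
            raise ValueError(f"{spec.name} must be finite")
        if not spec.minimum <= value <= spec.maximum:
            raise ValueError(f"{spec.name} is out of range")
        features.append((value - spec.minimum) / (spec.maximum - spec.minimum))
    return features
```

Rejecting unknown fields and non-finite values up front keeps the model from ever seeing inputs outside the range it was trained on.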

Regular Vulnerability Assessment and Penetration Testing

Conduct regular vulnerability assessments and penetration testing to identify potential security weaknesses in your AI-powered applications. This includes:

- Static Analysis: Analyzing the source code around the AI model, such as training pipelines, serving code, and model-loading logic, for potential vulnerabilities.

- Dynamic Analysis: Testing the AI model’s behavior in a live environment to identify runtime vulnerabilities.

- Penetration Testing: Simulating real-world attacks to assess the effectiveness of security controls.

Vulnerability assessments and penetration testing help you find and fix security weaknesses before attackers can exploit them. AI-assisted vulnerability assessment tools are becoming more capable and can supplement these techniques.

Implementing Access Controls and Authentication Mechanisms

Implement strong access controls and authentication mechanisms to protect sensitive data and prevent unauthorized access to AI models. This includes:

- Role-Based Access Control (RBAC): Assigning users specific roles and permissions based on their responsibilities.

- Multi-Factor Authentication (MFA): Requiring users to provide multiple forms of authentication before granting access.

- Least Privilege Principle: Granting users only the minimum level of access necessary to perform their duties.

Proper access controls and authentication mechanisms are essential for preventing data breaches and ensuring the confidentiality of sensitive information.
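
Role-based access control and least privilege can be illustrated with a small deny-by-default permission check. This is a sketch only. The role names and permissions are hypothetical, and a real deployment would normally delegate this to its identity provider or framework.

```python
from enum import Enum


class Permission(Enum):
    PREDICT = "predict"
    READ_METRICS = "read_metrics"
    UPDATE_MODEL = "update_model"


# Hypothetical role table: each role gets only what it needs.
ROLE_PERMISSIONS = {
    "end_user": frozenset({Permission.PREDICT}),
    "analyst": frozenset({Permission.PREDICT, Permission.READ_METRICS}),
    "ml_engineer": frozenset(Permission),
}


def is_allowed(role: str, permission: Permission) -> bool:
    """Deny by default: unknown roles have no permissions."""
    return permission in ROLE_PERMISSIONS.get(role, frozenset())


def require(role: str, permission: Permission) -> None:
    """Raise PermissionError unless the role holds the permission."""
    if not is_allowed(role, permission):
        raise PermissionError(f"{role!r} may not {permission.value}")
```

Calling `require(role, Permission.UPDATE_MODEL)` at the top of a model-update handler means that a missing or misspelled role fails closed rather than open.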

Utilizing AI-Powered Security Tools

Leverage AI-powered security tools to enhance your application security posture. These tools can help you automate security tasks, detect threats, and respond to incidents more effectively. Examples include:

- AI-Powered Threat Detection: Using AI to identify and respond to security threats in real-time.

- AI-Driven Vulnerability Assessment: Automating the process of identifying and assessing vulnerabilities in applications.

- AI-Based Security Automation: Automating security tasks such as patching, configuration management, and incident response.

These tools extend security automation and can strengthen an application’s overall security posture.

The Role of Machine Learning Security in Secure App Development

Machine learning (ML) security is a critical aspect of developing secure apps with artificial intelligence. It focuses on protecting the integrity, confidentiality, and availability of ML models, and it covers the techniques used to detect and prevent adversarial attacks, data poisoning, model inversion, and other threats that target those models.

Protecting Against Data Poisoning Attacks

Data poisoning attacks can significantly degrade the performance and accuracy of ML models. To protect against these attacks, it is crucial to implement robust data validation and sanitization techniques. This includes:

- Data Validation: Verifying the integrity and accuracy of training data.

- Data Sanitization: Removing or correcting suspicious, mislabeled, or untrusted records before training, and masking sensitive information the model does not need.

- Anomaly Detection: Identifying and removing anomalous data points that may be indicative of a poisoning attack.

By implementing these measures, you can reduce the risk of data poisoning and ensure the reliability of your ML models.
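
As one illustration of the anomaly detection step, the sketch below flags extreme values in a single numeric feature using the modified z-score, which is based on the median and the median absolute deviation (MAD). The 3.5 cutoff is a commonly used default and the function name is illustrative. A check like this only catches crude poisoning; carefully crafted poison samples can sit well inside the normal range.

```python
from statistics import median


def filter_outliers(values: list[float], threshold: float = 3.5):
    """Split values into (kept, flagged) using the modified z-score."""
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        # No spread to measure against, so keep everything rather than guess.
        return list(values), []
    kept, flagged = [], []
    for v in values:
        score = 0.6745 * abs(v - center) / mad
        (flagged if score > threshold else kept).append(v)
    return kept, flagged
```

The median and MAD are used instead of the mean and standard deviation because a handful of injected extreme values shifts them far less. Flagged records are best sent for review rather than silently dropped.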

Defending Against Adversarial Attacks

Adversarial attacks involve crafting malicious inputs designed to fool ML models. Defending against these attacks requires a multi-faceted approach, including:

- Adversarial Training: Training the ML model on adversarial examples to improve its robustness.

- Input Sanitization: Filtering out potentially malicious inputs before they are fed into the ML model.

- Model Hardening: Implementing techniques to make the ML model more resilient to adversarial attacks.

Adversarial training is one of the most widely used defenses, but it mainly protects against the kinds of perturbation seen during training, so it works best alongside the other measures listed here. Plan for adversarial attacks from the start of development rather than after deployment.
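
To make the idea concrete, the sketch below generates adversarial examples with the fast gradient sign method (FGSM) against a small logistic regression model, then builds a training batch that includes them. FGSM is used here only as one well-known way to craft adversarial inputs. The model, weights, and function names are illustrative assumptions.

```python
import math


def predict_proba(weights, bias, x):
    """Probability of class 1 under a logistic regression model."""
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1.0 / (1.0 + math.exp(-z))


def fgsm_example(weights, bias, x, label, epsilon):
    """Shift each feature by epsilon in the direction that raises the loss.

    For logistic regression with cross-entropy loss, the gradient of the
    loss with respect to the input is (p - label) * weights.
    """
    p = predict_proba(weights, bias, x)
    grad = [(p - label) * w for w in weights]
    return [xi + epsilon * ((g > 0) - (g < 0)) for xi, g in zip(x, grad)]


def adversarial_batch(weights, bias, batch, epsilon):
    """Return the batch plus an FGSM copy of each sample, labels unchanged."""
    augmented = list(batch)
    for x, label in batch:
        augmented.append((fgsm_example(weights, bias, x, label, epsilon), label))
    return augmented


# Illustrative values: a correctly classified point flips after perturbation.
weights, bias = [2.0, -1.0], 0.0
x, label = [1.0, 0.5], 1
x_adv = fgsm_example(weights, bias, x, label, epsilon=0.6)
```

Training on the output of `adversarial_batch`, regenerated as the weights change, is the basic loop of adversarial training: the model keeps seeing perturbed inputs paired with their correct labels.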

Addressing Model Inversion Attacks

Model inversion attacks aim to extract sensitive information from ML models. To mitigate this risk, it is crucial to:

- Limit Model Access: Restricting access to the ML model to authorized personnel only.

- Differential Privacy: Adding calibrated noise during training or to the model’s outputs so that results reveal little about any single training record.

- Model Obfuscation: Obfuscating the model’s architecture to make it more difficult to reverse engineer.

By implementing these measures, you can reduce the risk of model inversion attacks and protect sensitive information.
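
The noise-adding idea behind differential privacy can be shown with the Laplace mechanism applied to a simple count. This is a minimal sketch under stated assumptions: the query is a count with sensitivity 1, `epsilon` is the privacy budget, and the function names are illustrative. Naive floating-point noise has known weaknesses, so a production system would normally rely on a vetted differential privacy library.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponentials."""
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(true_count, epsilon, rng=None, sensitivity=1.0):
    """Return the count plus Laplace noise with scale sensitivity / epsilon."""
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = rng or random.SystemRandom()
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

A smaller `epsilon` means more noise and stronger privacy, while a larger one gives more accurate answers. The optional `rng` argument exists so that tests can pass a seeded generator.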

AI-Driven Security: Automating Security Tasks

AI-driven security is changing application security by automating routine tasks and improving threat detection. AI-powered tools can analyze large volumes of data, identify patterns, and respond to many threats faster than manual review allows.

Automated Vulnerability Assessment

AI can automate the process of vulnerability assessment by scanning applications for known vulnerabilities and identifying potential security weaknesses. This can significantly reduce the time and effort required to identify and remediate vulnerabilities.

Real-Time Threat Detection

AI-powered threat detection systems can analyze network traffic, system logs, and other data sources to identify and respond to security threats in real-time. These systems can detect anomalies and suspicious activities that may indicate a security breach.
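
Production threat detection systems use far richer models, but the core idea of flagging deviations from a learned baseline can be sketched with a rolling statistic. The example below assumes a hypothetical stream of per-minute event counts, such as failed logins. The class name, window size, and threshold are illustrative.

```python
from collections import deque
from statistics import mean, pstdev


class RateAnomalyDetector:
    """Flag counts that sit far above the recent rolling baseline."""

    def __init__(self, window=30, threshold=3.0, min_history=5):
        self.history = deque(maxlen=window)
        self.threshold = threshold
        self.min_history = min_history

    def observe(self, count: float) -> bool:
        """Return True if count is anomalous relative to recent history."""
        anomalous = False
        if len(self.history) >= self.min_history:
            baseline = mean(self.history)
            # Floor the spread at 1.0 so a flat baseline does not divide by zero.
            spread = max(pstdev(self.history), 1.0)
            anomalous = (count - baseline) / spread > self.threshold
        if not anomalous:
            # Keep flagged values out of the baseline so a burst cannot
            # make itself look normal.
            self.history.append(count)
        return anomalous
```

Excluding flagged values from the baseline is a design choice. It keeps an ongoing attack from gradually raising the threshold, at the cost of adapting more slowly to genuine changes in traffic.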

Incident Response Automation

AI can automate incident response by analyzing security alerts, identifying the root cause of incidents, and taking automated actions to contain and remediate the threats. This can significantly reduce the impact of security incidents.

Conclusion

Developing secure apps with artificial intelligence requires a comprehensive and proactive approach to security. By implementing the best practices outlined in this article, developers can mitigate the risks associated with AI-powered applications and ensure the confidentiality, integrity, and availability of their systems. As AI continues to evolve, it is crucial to stay informed about emerging security threats and adapt security strategies accordingly.

Visit NIST for more information on cybersecurity standards and guidelines.
