Securely Sharing Data for Machine Learning: Methods & Best Practices

Securely sharing data for machine learning is crucial for fostering innovation while protecting sensitive information. The rise of collaborative AI projects necessitates robust methods for sharing datasets without compromising privacy or security. This article explores various techniques and best practices for achieving this balance.

The Importance of Secure Data Sharing in Machine Learning

Machine learning models thrive on data. However, much of the data needed for training these models is sensitive, containing personally identifiable information (PII), financial records, or proprietary business data. Sharing this data carelessly can lead to severe consequences, including privacy breaches, regulatory violations, and reputational damage. Securely sharing data for machine learning allows organizations to collaborate, improve model accuracy, and accelerate AI development without sacrificing data security.

Challenges in Data Sharing

Several challenges complicate the process of sharing data securely. These include:

- Data Privacy Regulations: Compliance with regulations like GDPR and CCPA requires careful consideration of data anonymization and usage.

- Data Security Risks: Protecting data from unauthorized access, modification, or deletion is paramount.

- Maintaining Data Utility: Secure sharing methods must preserve the data’s usefulness for machine learning tasks.

- Scalability: The chosen methods must be scalable to handle large datasets and numerous participants.

- Trust and Governance: Establishing trust between parties and implementing clear data governance policies are essential.

Techniques for Securely Sharing Data for Machine Learning

Various techniques can be employed to share data securely for machine learning. Here’s an overview of some of the most prominent approaches:

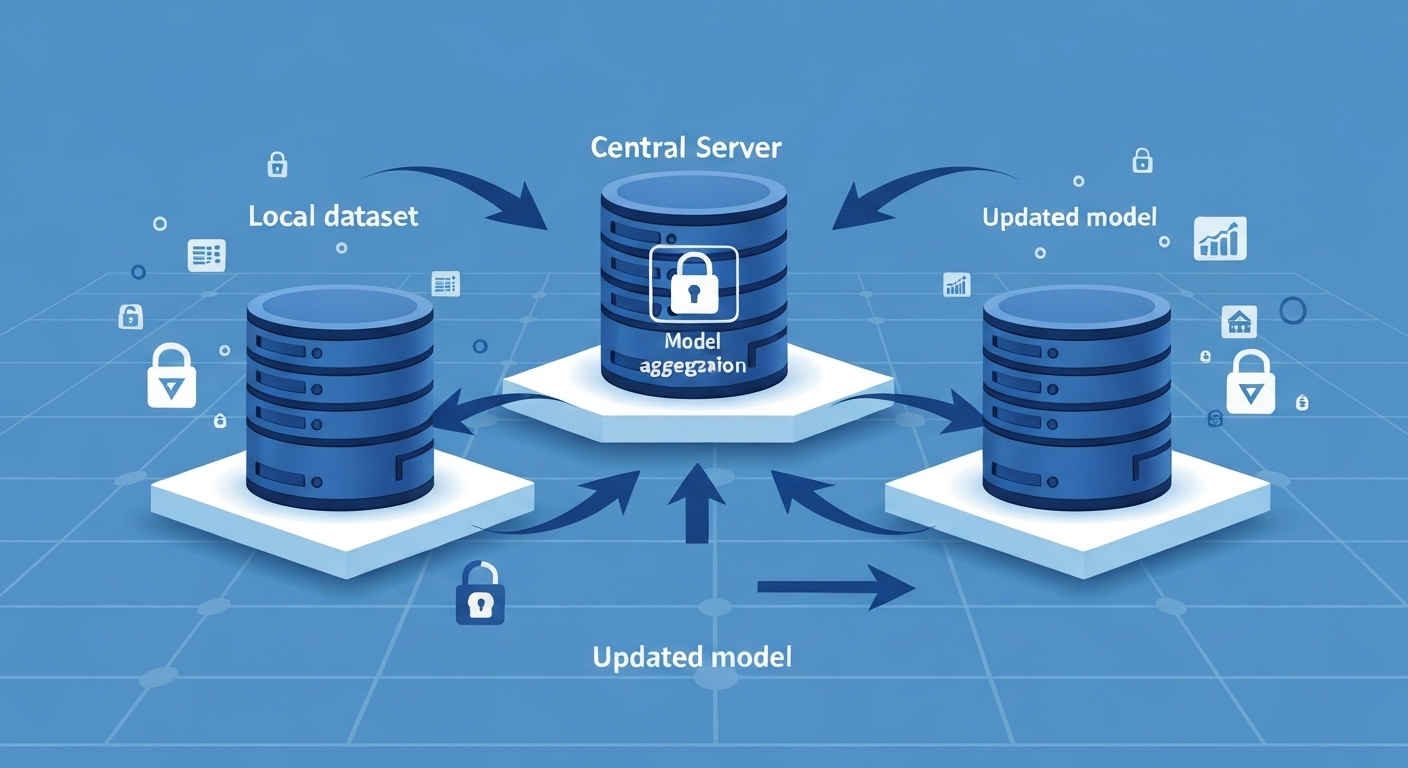

Federated Learning

Federated learning is a decentralized approach where models are trained on local devices or servers without directly sharing the raw data. Instead, only model updates are shared with a central server, which aggregates these updates to improve the global model. This minimizes the risk of exposing sensitive data. Because model updates can still leak information about the underlying data, federated learning is often combined with techniques such as differential privacy or secure aggregation.
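As a rough illustration of the aggregation step described above, the following sketch simulates federated averaging for a tiny linear model in plain Python. The function names (`local_update`, `federated_average`, `train_federated`), the learning rate, and the toy client datasets are assumptions made for this example and do not come from any particular framework. A real deployment would also need secure transport and, typically, some protection for the updates themselves.

```python
from typing import List, Tuple

Vector = List[float]
Dataset = List[Tuple[Vector, float]]  # (features, target) pairs held by one client


def local_update(weights: Vector, data: Dataset, lr: float = 0.5, epochs: int = 5) -> Vector:
    """Run a few gradient-descent steps for linear regression on one client's data."""
    w = list(weights)
    for _ in range(epochs):
        grad = [0.0] * len(w)
        for x, y in data:
            err = sum(wi * xi for wi, xi in zip(w, x)) - y
            for j, xj in enumerate(x):
                grad[j] += 2.0 * err * xj / len(data)
        w = [wi - lr * g for wi, g in zip(w, grad)]
    return w


def federated_average(client_weights: List[Vector], client_sizes: List[int]) -> Vector:
    """Combine client models, weighting each by its number of local examples."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(w[j] * n for w, n in zip(client_weights, client_sizes)) / total
        for j in range(dim)
    ]


def train_federated(clients: List[Dataset], dim: int, rounds: int = 50) -> Vector:
    """Server loop: broadcast the global model, collect updates, aggregate."""
    global_w = [0.0] * dim
    for _ in range(rounds):
        # Only weight vectors leave each client; the raw (x, y) pairs never do.
        updates = [local_update(global_w, data) for data in clients]
        global_w = federated_average(updates, [len(d) for d in clients])
    return global_w


# Toy data (hypothetical): both clients hold samples of y = 2*x + 1.
# The second feature is a constant 1.0 acting as the bias term.
client_a = [([0.0, 1.0], 1.0), ([0.5, 1.0], 2.0), ([1.0, 1.0], 3.0)]
client_b = [([0.2, 1.0], 1.4), ([0.8, 1.0], 2.6)]
model = train_federated([client_a, client_b], dim=2)
```

After training, `model` should be close to the slope and intercept that generated the toy data, even though the server never saw either client's samples.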

Differential Privacy

Differential privacy adds calibrated random noise to the data or model outputs to protect individual privacy. This ensures that the presence or absence of any single data point does not significantly affect the outcome of the analysis. The guarantee is quantified by a privacy parameter, commonly written ε (epsilon): smaller values give stronger privacy at the cost of noisier results. This quantifiable guarantee makes differential privacy a valuable tool for data anonymization.
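To make the idea of adding noise concrete, here is a minimal sketch of the Laplace mechanism applied to a counting query. The helper names (`laplace_noise`, `private_count`) and the sample records are assumptions for illustration. The standard-library `random` module is used only to keep the example self-contained; production systems generally need a cryptographically secure noise source and careful floating-point handling.

```python
import random
from typing import Callable, Iterable


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(
    records: Iterable[dict],
    predicate: Callable[[dict], bool],
    epsilon: float,
    rng: random.Random,
) -> float:
    """Return a noisy count of matching records under the Laplace mechanism."""
    true_count = sum(1 for r in records if predicate(r))
    # Adding or removing one record changes a count by at most 1.
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


# Hypothetical records; only the noisy answer would be released.
patients = [{"age": a} for a in (23, 35, 41, 58, 62, 67, 70)]
rng = random.Random()
noisy = private_count(patients, lambda r: r["age"] >= 60, epsilon=1.0, rng=rng)
```

A smaller `epsilon` increases the noise scale, which strengthens privacy but makes each released count less accurate.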

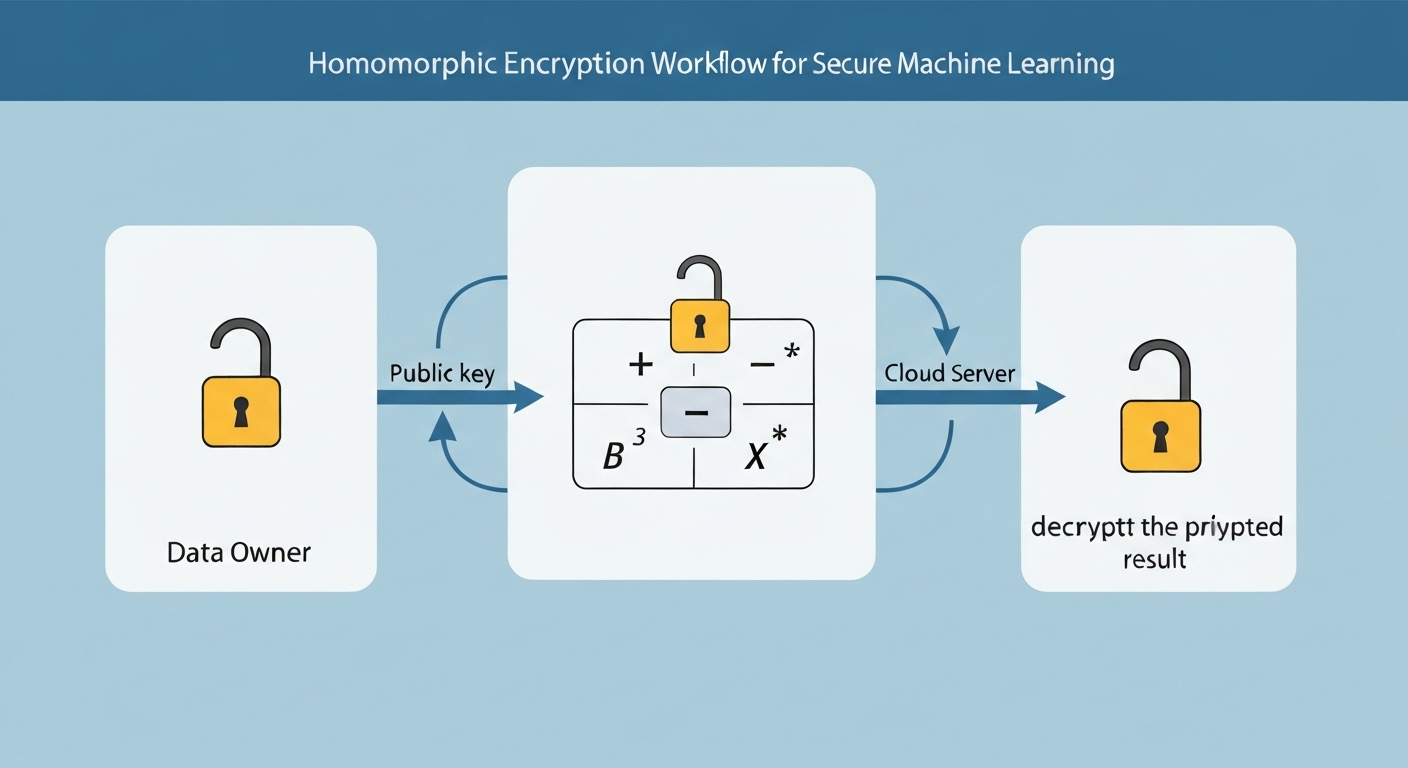

Homomorphic Encryption

Homomorphic encryption allows computations to be performed on encrypted data without decrypting it first. This means that machine learning models can be trained on encrypted data, and the results can be decrypted only by authorized parties. The main trade-off is performance: computing on ciphertexts is substantially slower than computing on plaintext.
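The sketch below shows the core idea with a toy version of the Paillier cryptosystem, which is additively homomorphic: ciphertexts can be added, or multiplied by a plaintext constant, without decryption. It is not a fully homomorphic scheme, and the hard-coded primes are far too small for real use. The function names and parameters are assumptions for illustration only; real projects should rely on a vetted cryptographic library.

```python
import math
import secrets
from typing import Tuple


def generate_keys(p: int, q: int) -> Tuple[int, Tuple[int, int]]:
    """Build a Paillier key pair from two primes, using the generator g = n + 1."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    mu = pow(lam, -1, n)  # modular inverse (Python 3.8+)
    return n, (lam, mu)


def encrypt(n: int, message: int) -> int:
    """Encrypt an integer 0 <= message < n under public key n."""
    n_sq = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, message, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(n: int, private_key: Tuple[int, int], ciphertext: int) -> int:
    lam, mu = private_key
    u = pow(ciphertext, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(n: int, c1: int, c2: int) -> int:
    """Multiplying ciphertexts yields an encryption of the sum of the plaintexts."""
    return (c1 * c2) % (n * n)


def scale_encrypted(n: int, ciphertext: int, k: int) -> int:
    """Raising a ciphertext to k yields an encryption of k times the plaintext."""
    return pow(ciphertext, k, n * n)


# Demo primes (Mersenne primes, chosen for convenience; NOT secure sizes).
public_n, private_key = generate_keys(2**31 - 1, 2**61 - 1)
enc_a = encrypt(public_n, 12)
enc_b = encrypt(public_n, 30)
enc_sum = add_encrypted(public_n, enc_a, enc_b)  # computed without the private key
total = decrypt(public_n, private_key, enc_sum)
```

Sums and weighted sums are the building blocks of operations such as aggregating gradients, which is why additive schemes are often enough for simple collaborative workloads.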

Secure Multi-Party Computation (SMPC)

Secure multi-party computation enables multiple parties to jointly compute a function over their private inputs without revealing those inputs to each other. SMPC protocols can be used to train machine learning models collaboratively while protecting the confidentiality of each party’s data. SMPC is particularly useful for scenarios requiring strict data privacy.
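One simple building block behind many SMPC protocols is additive secret sharing. The sketch below, with assumed names (`share`, `reconstruct`, `secure_sum`) and an assumed modulus, simulates several parties computing the sum of their private values in a single process. In a real protocol each share would travel over a secure channel to a different party, and this simplified version only protects against parties that follow the protocol honestly.

```python
import secrets
from typing import List

PRIME = 2**61 - 1  # modulus for all share arithmetic (an assumption for this sketch)


def share(secret: int, n_parties: int) -> List[int]:
    """Split a secret into n random-looking shares that sum to it modulo PRIME."""
    shares = [secrets.randbelow(PRIME) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % PRIME)
    return shares


def reconstruct(shares: List[int]) -> int:
    return sum(shares) % PRIME


def secure_sum(private_inputs: List[int]) -> int:
    """Simulate n parties jointly summing their inputs without revealing them."""
    n = len(private_inputs)
    # Party i splits its input and sends share j to party j.
    distributed = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received and publishes only that partial sum.
    partial_sums = [sum(distributed[i][j] for i in range(n)) % PRIME for j in range(n)]
    # The published partial sums reveal the total, but not any individual input.
    return reconstruct(partial_sums)


joint_total = secure_sum([10, 25, 7])
```

Any subset of fewer than all the shares of a value is uniformly random, so no single party learns another party's input from what it receives.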

Data Anonymization and Pseudonymization

Data anonymization removes or modifies identifying information so that individuals can no longer be re-identified. Pseudonymization replaces identifying information with pseudonyms, which can be reversed under certain conditions, for example by whoever holds the key or lookup table. These techniques reduce the risk of privacy breaches but require careful consideration to maintain data utility, and anonymized data should still be checked against re-identification through linkage with other datasets. Effective data anonymization is critical for compliance.
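A common way to pseudonymize an identifier is a keyed hash. The sketch below uses HMAC-SHA256 from the Python standard library; the function names, field names, and sample records are hypothetical. The lookup table it returns is what makes re-identification possible, so it would stay with the data owner alongside the secret key.

```python
import hashlib
import hmac
from typing import Dict, List, Tuple


def pseudonymize(identifier: str, key: bytes) -> str:
    """Derive a stable pseudonym; without the key it cannot be recomputed."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def pseudonymize_records(
    records: List[dict], id_field: str, key: bytes
) -> Tuple[List[dict], Dict[str, str]]:
    """Return shareable records plus a private pseudonym-to-identifier lookup."""
    lookup: Dict[str, str] = {}
    shared: List[dict] = []
    for record in records:
        pseudonym = pseudonymize(record[id_field], key)
        lookup[pseudonym] = record[id_field]
        shared.append({**record, id_field: pseudonym})
    return shared, lookup


# Hypothetical key and records; in practice the key comes from a secrets manager.
secret_key = b"replace-with-a-randomly-generated-key"
rows = [
    {"email": "ana@example.com", "purchases": 3},
    {"email": "bo@example.com", "purchases": 7},
    {"email": "ana@example.com", "purchases": 1},
]
shared_rows, private_lookup = pseudonymize_records(rows, "email", secret_key)
```

Because the same identifier always maps to the same pseudonym under one key, records can still be joined or grouped per person, which preserves much of the data's utility.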

Confidential Computing

Confidential computing uses hardware-based security to protect data in use. This involves running computations in a trusted execution environment (TEE), such as Intel SGX, which isolates the code and data from the rest of the system. This protects the data from unauthorized access even if other software on the machine, such as the host operating system, is compromised. Confidential computing bolsters machine learning security by protecting data during processing.

Best Practices for Securely Sharing Data

In addition to choosing the right techniques, implementing robust data governance policies and following best practices are essential for securely sharing data for machine learning.

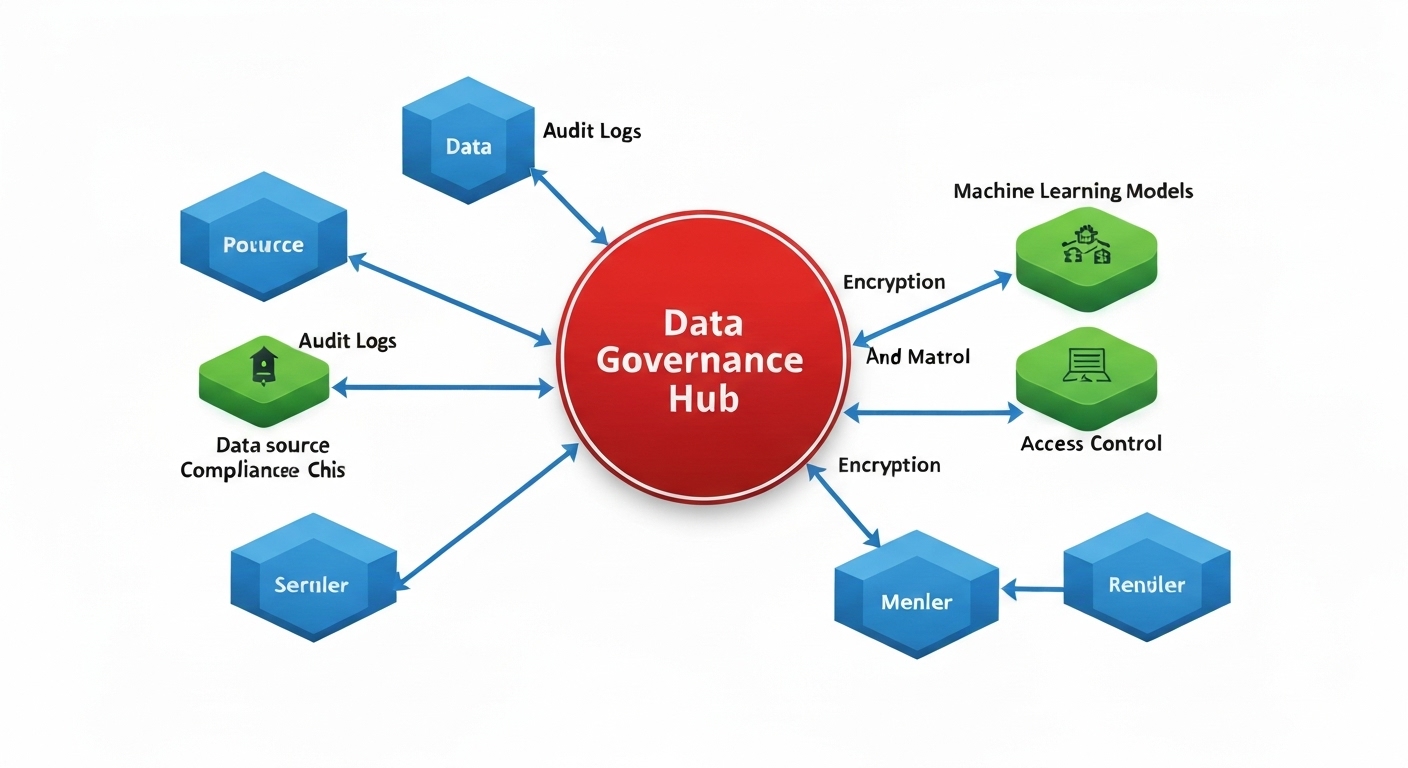

Establish Clear Data Governance Policies

Define clear roles and responsibilities for data access, usage, and sharing. Implement data access controls and audit trails to track data usage and ensure compliance with policies. Comprehensive data governance is foundational to secure data sharing.
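As one possible way to combine access controls with an audit trail, the sketch below checks each request against a role-to-permission map and records every attempt, allowed or not. The class name `GovernedDataset`, the roles, and the actions are hypothetical; real systems would usually delegate this to an identity provider and a tamper-resistant log store.

```python
from datetime import datetime, timezone
from typing import Dict, List, Set


class GovernedDataset:
    """Wraps a dataset name with role-based access checks and an audit log."""

    def __init__(self, name: str, role_permissions: Dict[str, Set[str]]):
        self.name = name
        self.role_permissions = role_permissions
        self.audit_log: List[dict] = []

    def request(self, user: str, role: str, action: str) -> bool:
        """Decide whether the action is permitted and record the attempt."""
        allowed = action in self.role_permissions.get(role, set())
        self.audit_log.append(
            {
                "time": datetime.now(timezone.utc).isoformat(),
                "dataset": self.name,
                "user": user,
                "role": role,
                "action": action,
                "allowed": allowed,
            }
        )
        return allowed


# Hypothetical policy: stewards may read and share, engineers may only read.
policy = {"data_steward": {"read", "share"}, "ml_engineer": {"read"}}
dataset = GovernedDataset("claims_2023", policy)
can_read = dataset.request("dana", "ml_engineer", "read")
can_share = dataset.request("dana", "ml_engineer", "share")
```

Logging denied requests as well as granted ones gives auditors a record of attempted policy violations, not just of successful access.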

Implement Data Minimization

Only share the minimum amount of data necessary for the intended purpose. Avoid sharing unnecessary attributes that could increase the risk of re-identification or privacy breaches. Practicing data minimization aligns with AI ethics and legal requirements.
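In code, data minimization often comes down to an explicit allow-list of fields applied before anything leaves the organization. The following sketch assumes records are plain dictionaries; the function name `minimize` and the field names are illustrative only.

```python
from typing import Iterable, List


def minimize(records: Iterable[dict], allowed_fields: List[str]) -> List[dict]:
    """Keep only explicitly approved fields; everything else is dropped."""
    return [
        {field: record[field] for field in allowed_fields if field in record}
        for record in records
    ]


# Hypothetical customer records and an allow-list agreed for a churn model.
customers = [
    {"name": "Ana Silva", "ssn": "000-00-0000", "tenure_months": 14, "churned": False},
    {"name": "Bo Chen", "ssn": "000-00-0001", "tenure_months": 3, "churned": True},
]
shareable = minimize(customers, ["tenure_months", "churned"])
```

An allow-list is generally safer than a deny-list here, because newly added sensitive columns stay private by default.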

Use Secure Communication Channels

Employ encrypted communication channels for transferring data and model updates. This protects data from interception and unauthorized access during transmission. Secure channels are crucial for maintaining data security.
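For transfers implemented directly in Python, the standard-library `ssl` module can enforce an encrypted, authenticated channel. The sketch below builds a client-side TLS context with certificate and hostname verification; the function names are assumptions for this example, and the host and port passed to `open_secure_connection` would be whatever endpoint the collaborating parties agree on.

```python
import socket
import ssl


def build_client_context() -> ssl.SSLContext:
    """Create a TLS context that verifies the server certificate and hostname."""
    context = ssl.create_default_context()
    # Refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


def open_secure_connection(host: str, port: int, timeout: float = 10.0) -> ssl.SSLSocket:
    """Open a TCP connection and wrap it in TLS before any data is sent."""
    context = build_client_context()
    raw_socket = socket.create_connection((host, port), timeout=timeout)
    # server_hostname enables hostname checking against the certificate.
    return context.wrap_socket(raw_socket, server_hostname=host)


tls_context = build_client_context()
```

Keeping verification enabled matters as much as encryption itself: an encrypted connection to an unverified server still exposes data to interception.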

Regularly Audit and Monitor Data Access

Conduct regular audits to ensure compliance with data governance policies and identify potential security vulnerabilities. Monitor data access patterns for suspicious activity and implement appropriate security measures. Proactive monitoring strengthens data privacy safeguards.
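A very simple form of monitoring is to count accesses per user and flag anyone above an agreed threshold. The sketch below assumes a log of dictionaries with a `user` key and an arbitrary threshold; both are hypothetical, and real monitoring would normally consider time windows, data volume, and baselines per role.

```python
from collections import Counter
from typing import Iterable, List


def flag_heavy_users(access_log: Iterable[dict], threshold: int) -> List[str]:
    """Return users whose number of logged accesses exceeds the threshold."""
    counts = Counter(entry["user"] for entry in access_log)
    return sorted(user for user, count in counts.items() if count > threshold)


# Hypothetical access log: one entry per dataset read.
log_entries = (
    [{"user": "dana", "dataset": "claims_2023"}] * 3
    + [{"user": "eli", "dataset": "claims_2023"}] * 12
    + [{"user": "fay", "dataset": "claims_2023"}] * 1
)
suspicious = flag_heavy_users(log_entries, threshold=10)
```

Flagged users are a starting point for review rather than proof of misuse.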

Provide Training on Data Security and Privacy

Educate employees and collaborators on data security and privacy best practices. Raise awareness about the risks of data breaches and the importance of following data governance policies. A well-trained workforce is essential for maintaining data privacy.

Leverage Secure Data Enclaves

Secure data enclaves provide a controlled environment for sharing and analyzing sensitive data. These enclaves offer enhanced security features, such as access controls, encryption, and audit logging, to protect data from unauthorized access and misuse. Consider secure data enclaves when sharing particularly sensitive data for machine learning.

Conclusion

Securely sharing data for machine learning is paramount for fostering innovation while protecting sensitive information. By adopting the right techniques, implementing robust data governance policies, and following best practices, organizations can unlock the potential of collaborative AI projects without compromising data security and privacy. Techniques like federated learning, differential privacy, homomorphic encryption, and secure multi-party computation provide viable solutions for various data sharing scenarios. Embracing these approaches will enable the responsible and ethical development of machine learning applications. For more information on data security, visit nist.gov.
