How to Secure Machine Learning Systems: A Comprehensive Guide

Securing machine learning systems is a critical concern in today’s AI-driven world. As machine learning (ML) models are integrated into more applications, from healthcare to finance, securing them is essential to protect sensitive data, maintain system integrity, and prevent malicious attacks.

Understanding the Security Risks in Machine Learning

Machine learning systems are vulnerable to a range of security threats that can compromise their functionality and reliability. Understanding these risks is the first step toward implementing effective security measures. Three of the most prominent categories are data poisoning attacks, adversarial attacks, and model inversion attacks.

Data Poisoning Attacks

Data poisoning attacks involve injecting malicious data into the training dataset to manipulate the model’s behavior. This can lead to biased predictions or even complete model failure. For example, attackers might introduce fake transactions to skew fraud detection models or insert misleading medical records to compromise diagnostic accuracy. Mitigation strategies include data validation, anomaly detection, and robust statistical techniques to identify and remove poisoned data. Employing secure data pipelines and access controls is also crucial.
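As one concrete form of the anomaly detection mentioned above, the sketch below flags numeric training values that sit far from the bulk of the data. It uses the median and the median absolute deviation (MAD) instead of the mean and standard deviation, because a few injected extreme points can drag the mean and inflate the standard deviation enough to hide themselves. This is a minimal sketch assuming a single numeric feature; the function name `mad_outlier_mask`, the toy transaction amounts, and the 3.5 cutoff (a commonly used default for the modified z-score) are illustrative choices. Subtle poisoning that stays within normal ranges will not be caught this way.

```python
import statistics


def mad_outlier_mask(values, threshold=3.5):
    """Return True for each value whose modified z-score exceeds `threshold`.

    The median and MAD are far less sensitive to a handful of injected
    extreme points than the mean and standard deviation.
    """
    median = statistics.median(values)
    mad = statistics.median(abs(v - median) for v in values)
    if mad == 0:
        # Most values are identical: flag anything that differs from the median.
        return [v != median for v in values]
    # 0.6745 rescales the MAD so scores are comparable to ordinary z-scores
    # when the data are roughly normally distributed.
    return [abs(0.6745 * (v - median) / mad) > threshold for v in values]


# Hypothetical transaction amounts with one injected extreme value.
amounts = [42.0, 39.5, 41.2, 40.8, 43.1, 38.9, 40.0, 9999.0]
clean = [a for a, flagged in zip(amounts, mad_outlier_mask(amounts)) if not flagged]
# clean keeps the first seven amounts and drops 9999.0
```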

Adversarial Attacks

Adversarial attacks involve creating inputs that are specifically designed to fool the model. These inputs, often referred to as adversarial examples, are subtly modified to cause the model to make incorrect predictions. For instance, adding a small amount of carefully chosen noise to an image can cause an image recognition model to misclassify it. Defending against adversarial attacks requires techniques such as adversarial training, input sanitization, and defensive distillation. Understanding the specific vulnerabilities of the model and the types of attacks it is likely to face is essential. The NIST AI Risk Management Framework (AI RMF) offers further guidance on identifying and managing such risks.
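To make "subtly modified" concrete, the sketch below implements the Fast Gradient Sign Method (FGSM), a well-known attack introduced by Goodfellow et al., against a hypothetical three-feature logistic-regression model. FGSM shifts every input feature by a fixed amount epsilon in whichever direction increases the model's loss. The weights, input, and epsilon are invented for illustration; against image models the same idea spreads a tiny change across thousands of pixels instead of a large change across three features.

```python
import math


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def predict_proba(w, b, x):
    """P(y = 1 | x) for a binary logistic-regression model."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def _sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """Fast Gradient Sign Method against binary logistic regression.

    For cross-entropy loss the gradient with respect to the input is
    (p - y) * w, so each feature moves by epsilon in the direction that
    increases the loss.
    """
    p = predict_proba(w, b, x)
    return [xi + epsilon * _sign((p - y) * wi) for xi, wi in zip(x, w)]


# Hypothetical toy model and correctly classified input (true label 1).
w, b = [2.0, -3.0, 1.5], 0.0
x, y = [0.5, 0.1, 0.2], 1
x_adv = fgsm(w, b, x, y, epsilon=0.3)
# Roughly 0.73 (class 1) before the perturbation and 0.28 (class 0) after.
print(predict_proba(w, b, x), predict_proba(w, b, x_adv))
```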

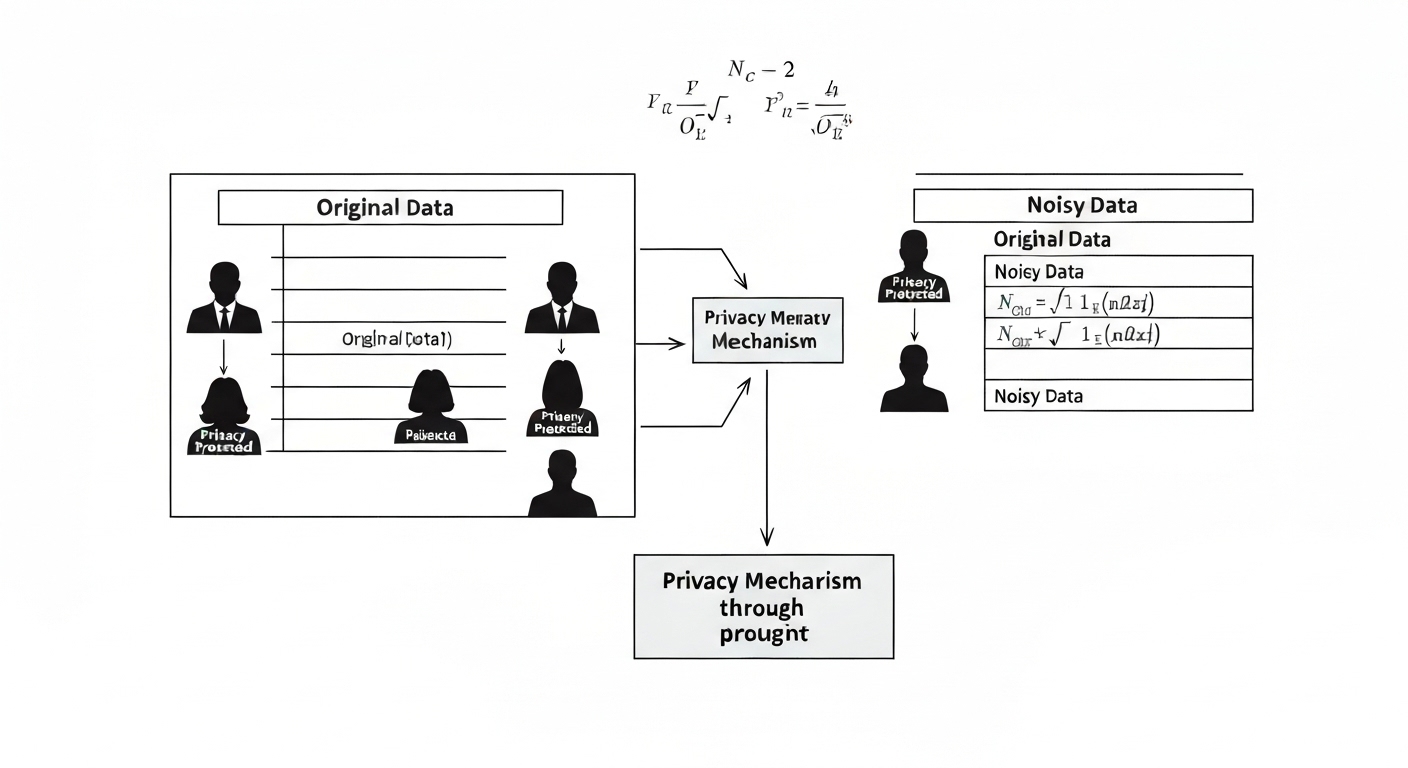

Model Inversion Attacks and Model Privacy

Model inversion attacks aim to extract sensitive information about the training data from the model itself. By querying the model with carefully crafted inputs, attackers can infer private attributes of the training data. This is particularly concerning when dealing with sensitive data such as medical records or financial information. To protect against model inversion attacks, techniques such as differential privacy, federated learning, and model obfuscation can be employed. These methods aim to reduce the amount of information that can be extracted from the model without significantly impacting its performance.

Implementing Machine Learning Security Best Practices

Securing machine learning systems requires a multi-faceted approach that addresses various aspects of the ML lifecycle, from data collection and training to deployment and monitoring. Here are some best practices to consider:

- Secure Data Handling: Implement strict access controls and encryption to protect sensitive data. Regularly audit data pipelines to identify and mitigate vulnerabilities.

- Robust Model Training: Use robust training techniques such as adversarial training and data augmentation to improve the model’s resilience to attacks. Validate data integrity throughout the training process.

- Secure Model Deployment: Deploy models in secure environments with appropriate access controls and monitoring. Use secure communication protocols to protect against eavesdropping and tampering.

- Regular Monitoring and Auditing: Continuously monitor the model’s performance and behavior to detect anomalies and potential attacks. Conduct regular security audits to identify and address vulnerabilities.

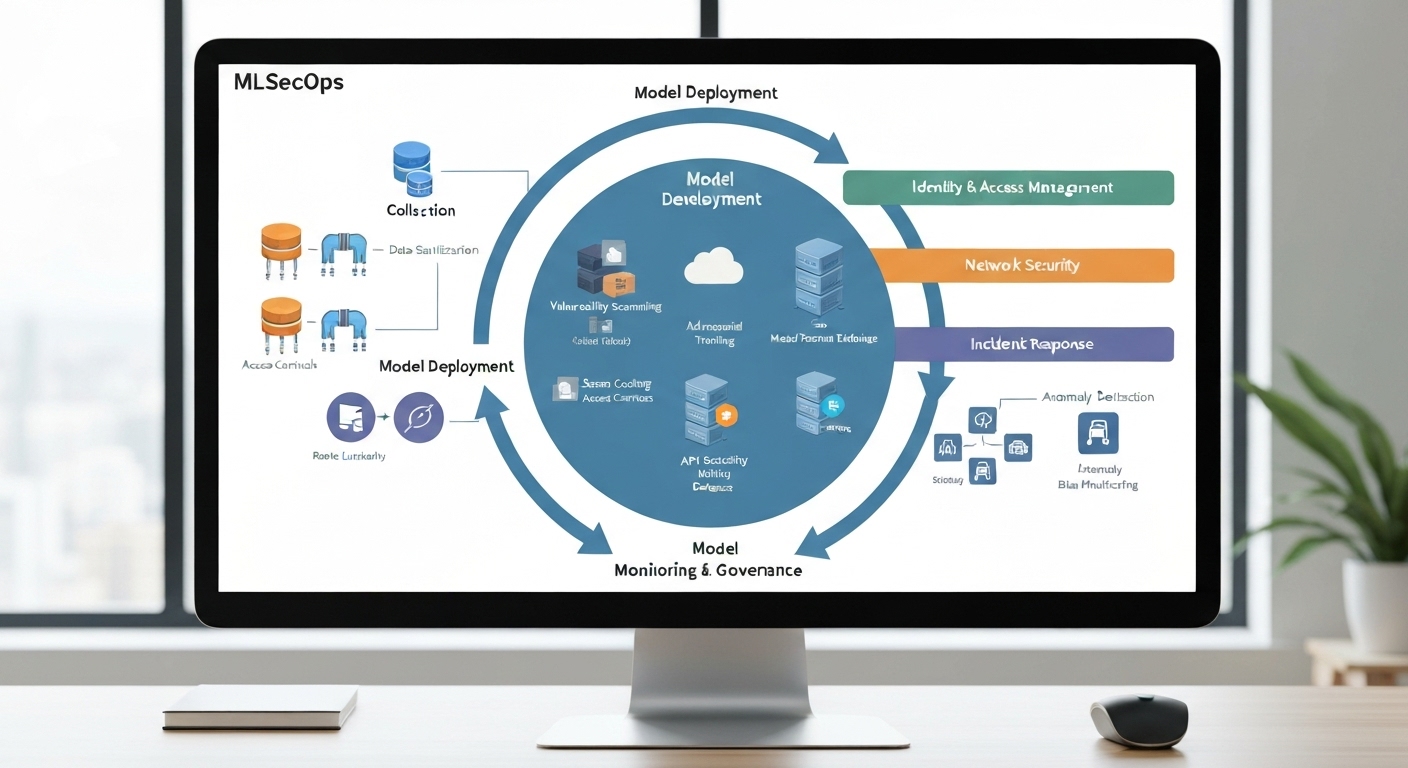

- MLSecOps Integration: Adopt an MLSecOps approach to integrate security practices into the entire machine learning lifecycle, fostering collaboration between data scientists, security engineers, and operations teams.
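One simple way to act on the monitoring practice listed above is to compare the distribution of recent model scores with a baseline captured at deployment time. The sketch below computes the Population Stability Index (PSI), a drift statistic commonly used in credit-risk and ML monitoring. The bin edges, sample scores, and the 0.25 alert threshold (a frequently cited rule of thumb, not a standard) are assumptions to tune for your own model. A large PSI does not prove an attack; it only signals that inputs or behavior have shifted enough to warrant investigation.

```python
import bisect
import math


def population_stability_index(baseline, recent, edges, floor=1e-6):
    """Population Stability Index between two samples of a score or feature.

    `edges` are sorted bin boundaries; values outside them are clamped into
    the first or last bin. `floor` keeps empty bins from producing log(0).
    """
    def proportions(sample):
        counts = [0] * (len(edges) - 1)
        for v in sample:
            idx = bisect.bisect_right(edges, v) - 1
            counts[min(max(idx, 0), len(counts) - 1)] += 1
        return [max(c / len(sample), floor) for c in counts]

    p, q = proportions(baseline), proportions(recent)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


# Hypothetical model scores in [0, 1].
edges = [0.0, 0.25, 0.5, 0.75, 1.0]
baseline_scores = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
recent_scores = [0.8, 0.85, 0.9, 0.95, 0.9, 0.8, 0.6, 0.7]

psi = population_stability_index(baseline_scores, recent_scores, edges)
if psi > 0.25:  # rule-of-thumb threshold for a significant shift
    print(f"ALERT: score distribution shifted (PSI={psi:.2f})")
```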

Data Validation and Sanitization

Data validation and sanitization are critical for preventing data poisoning attacks. Implement robust validation rules to ensure that input data conforms to expected formats and ranges. Sanitize data to remove or neutralize potentially malicious content. Employ anomaly detection techniques to identify and flag suspicious data points that deviate from the norm. Securely handling user input and preventing SQL injection-style attacks are paramount in web applications leveraging ML. Flashs.cloud offers solutions for secure data handling.
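The validation rules described above can be as simple as a per-field schema of expected types and allowed ranges that every record must pass before it enters a training set. The sketch below assumes a hypothetical fraud-detection record with `amount`, `merchant_id`, and `hour` fields; the `FieldRule` class, `SCHEMA` dictionary, and `validate_record` function are illustrative names, not part of any particular library.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class FieldRule:
    """Hypothetical rule: expected Python type plus optional inclusive bounds."""
    kind: type
    min_value: Optional[float] = None
    max_value: Optional[float] = None


# Hypothetical schema for a fraud-detection training record.
SCHEMA = {
    "amount": FieldRule(float, 0.0, 1_000_000.0),
    "merchant_id": FieldRule(str),
    "hour": FieldRule(int, 0, 23),
}


def validate_record(record, schema=SCHEMA):
    """Return a list of problems; an empty list means the record passed."""
    errors = []
    for name, rule in schema.items():
        if name not in record:
            errors.append(f"missing field: {name}")
            continue
        value = record[name]
        # bool is a subclass of int in Python, so reject it explicitly.
        if isinstance(value, bool) or not isinstance(value, rule.kind):
            errors.append(f"{name}: expected {rule.kind.__name__}, got {type(value).__name__}")
            continue
        if rule.min_value is not None and value < rule.min_value:
            errors.append(f"{name}: {value} below minimum {rule.min_value}")
        if rule.max_value is not None and value > rule.max_value:
            errors.append(f"{name}: {value} above maximum {rule.max_value}")
    unexpected = set(record) - set(schema)
    if unexpected:
        errors.append(f"unexpected fields: {sorted(unexpected)}")
    return errors
```

Records that return a non-empty list can be quarantined for review rather than silently dropped, which also leaves a trail of rejected inputs for later auditing.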

How to Secure Machine Learning Systems Against Adversarial Examples

Protecting against adversarial examples requires a combination of defensive techniques. Adversarial training involves training the model on adversarial examples, typically alongside clean data, to make it more robust to future attacks. Input sanitization involves pre-processing inputs to remove or reduce the impact of adversarial perturbations. Defensive distillation involves training a second model on the softened output probabilities (produced at a high softmax temperature) of a previously trained model, which smooths the decision boundaries and makes adversarial examples harder to craft with gradient-based methods; later research showed that stronger attacks can bypass it, so it should not be the only defense. Regularly evaluating your model against different types of adversarial attacks is essential to identify vulnerabilities and assess the effectiveness of your defenses.
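Adversarial training can be sketched by generating an adversarial copy of each training example against the current model and then fitting both the clean and the perturbed input. The minimal sketch below does this for binary logistic regression with FGSM perturbations and plain stochastic gradient descent. The function names, the toy two-cluster data, and the hyperparameters (`epsilon`, `lr`, `epochs`) are assumptions. Practical adversarial training for deep networks usually relies on a deep-learning framework and often a stronger multi-step attack such as projected gradient descent (PGD).

```python
import math
import random


def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    ez = math.exp(z)
    return ez / (1.0 + ez)


def _sign(v):
    return (v > 0) - (v < 0)


def fgsm(w, b, x, y, epsilon):
    """FGSM perturbation of x against logistic regression with weights w, bias b."""
    p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
    return [xi + epsilon * _sign((p - y) * wi) for xi, wi in zip(x, w)]


def train_adversarial(data, epsilon=0.1, lr=0.1, epochs=50, seed=0):
    """SGD on cross-entropy loss, fitting each example and its FGSM copy."""
    rng = random.Random(seed)
    w = [0.0] * len(data[0][0])
    b = 0.0
    data = list(data)  # shuffle a copy, not the caller's list
    for _ in range(epochs):
        rng.shuffle(data)
        for x, y in data:
            # Craft the adversarial example against the *current* model.
            x_adv = fgsm(w, b, x, y, epsilon)
            for sample in (x, x_adv):
                err = sigmoid(sum(wj * xj for wj, xj in zip(w, sample)) + b) - y
                w = [wj - lr * err * xj for wj, xj in zip(w, sample)]
                b -= lr * err
    return w, b


# Hypothetical toy data: two Gaussian clusters labelled 1 and 0.
rng = random.Random(1)
data = [([rng.gauss(1, 0.3), rng.gauss(1, 0.3)], 1) for _ in range(50)]
data += [([rng.gauss(-1, 0.3), rng.gauss(-1, 0.3)], 0) for _ in range(50)]
w, b = train_adversarial(data, epsilon=0.1)
```

Measuring accuracy on FGSM-perturbed copies of held-out data is one way to carry out the regular evaluation recommended above; a model that resists FGSM may still fail against stronger attacks.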

Model Security and Access Control

Implementing strict access controls is crucial for protecting models from unauthorized access and tampering. Limit access to model files and deployment environments to authorized personnel only. Use strong authentication and authorization mechanisms to verify the identity of users and control their access privileges. Implement audit logging to track access to models and identify any suspicious activity. Secure your model registry to prevent unauthorized modifications or deletions. Secure coding practices and regular security assessments can help ensure model integrity. Consider implementing multi-factor authentication for sensitive operations related to model management.
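One concrete control for a model registry is to record a cryptographic digest of each model artifact when it is registered and to verify that digest before the file is loaded, so that a swapped or modified file is refused. The sketch below uses SHA-256 from Python's standard `hashlib`. The function names are hypothetical, and the sketch assumes the expected digest is stored somewhere that an attacker able to overwrite the model file cannot also rewrite (for example, access-controlled or signed registry metadata). The check matters most for formats such as Python's `pickle`, where loading a file can execute arbitrary code.

```python
import hashlib
import hmac


def sha256_of_file(path, chunk_size=1 << 20):
    """Hash a file in chunks so large model artifacts need not fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def verify_model_artifact(path, expected_sha256):
    """Raise ValueError if the file differs from the digest recorded at registration."""
    actual = sha256_of_file(path)
    # compare_digest compares the two hex strings in constant time.
    if not hmac.compare_digest(actual, expected_sha256.lower()):
        raise ValueError(f"integrity check failed for {path}")
    return actual
```

In a deployment pipeline, `verify_model_artifact` would run immediately before the model is deserialized, and a failure would be logged as a security event rather than retried.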

Advanced Security Techniques for ML Systems

Beyond basic security measures, advanced techniques can further enhance the security of machine learning systems. These include differential privacy, federated learning, and homomorphic encryption.

Differential Privacy

Differential privacy is a formal privacy guarantee, usually achieved by adding carefully calibrated noise to the training data, to the training process (for example, to gradients), or to model outputs, so that the result reveals very little about whether any individual record was included. The amount of noise is governed by a privacy parameter, often written ε: smaller values give stronger privacy but typically reduce accuracy, so the goal is to limit what can be learned about any individual data point while preserving as much of the model’s overall performance as possible. Differential privacy can be particularly useful when dealing with sensitive data such as medical records or financial information. Techniques like adding Laplace noise to aggregate queries or using randomized response mechanisms can help achieve differential privacy.
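The Laplace mechanism mentioned above can be sketched for a counting query. A count has sensitivity 1, because adding or removing one person's record changes it by at most 1, so adding Laplace noise with scale 1/ε makes the released count ε-differentially private. The function names and sample records are hypothetical. This sketch uses Python's non-cryptographic `random` module and naive floating-point sampling, both known to be inadequate for real deployments, so production systems should rely on a vetted differential-privacy library.

```python
import random


def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. Exp(1) draws."""
    return scale * (rng.expovariate(1.0) - rng.expovariate(1.0))


def private_count(records, predicate, epsilon, rng=None):
    """Release an epsilon-DP count of records matching `predicate`.

    Counting queries have sensitivity 1, so the Laplace scale is 1 / epsilon.
    """
    rng = rng or random.Random()
    true_count = sum(1 for r in records if predicate(r))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Hypothetical patient records; the true answer here is 4.
records = [{"age": a} for a in (23, 35, 41, 52, 67, 29, 44)]
noisy = private_count(records, lambda r: r["age"] > 40, epsilon=1.0)
```

Each released answer consumes privacy budget, so repeatedly querying the same data weakens the overall guarantee; real systems track the cumulative ε spent.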

Federated Learning

Federated learning is a distributed learning approach that allows models to be trained on decentralized data without directly accessing the data itself. Instead of sending the data to a central server, the model is trained locally on each device or data source, and only the model updates are shared with the central server. This can significantly reduce the risk of data breaches and improve data privacy. Federated learning is particularly useful in scenarios where data is distributed across multiple devices or organizations, such as in healthcare or IoT applications. Techniques like secure aggregation and differential privacy can further enhance the privacy and security of federated learning.
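The federated training loop described above can be sketched with Federated Averaging (FedAvg): each client trains locally starting from the current global model, and the server averages the returned parameters, weighting each client by its number of examples. The sketch below assumes a one-feature linear model y ≈ w·x + b and two hypothetical clients; the function names and hyperparameters are illustrative. Only parameters and sample counts leave each client, but the sketch omits the secure aggregation and differential privacy recommended above, and shared updates can still leak information about local data.

```python
def local_train(params, data, lr=0.1, epochs=5):
    """Client-side full-batch gradient descent on mean squared error for y = w*x + b."""
    w, b = params
    n = len(data)
    for _ in range(epochs):
        grad_w = 2 / n * sum((w * x + b - y) * x for x, y in data)
        grad_b = 2 / n * sum((w * x + b - y) for x, y in data)
        w, b = w - lr * grad_w, b - lr * grad_b
    return (w, b), n


def federated_averaging(client_datasets, rounds=100, init=(0.0, 0.0)):
    """Server loop: broadcast global params, collect local updates, average them."""
    global_params = init
    for _ in range(rounds):
        # Raw data never leaves a client; only (params, sample count) is returned.
        updates = [local_train(global_params, data) for data in client_datasets]
        total = sum(n for _, n in updates)
        global_params = tuple(
            sum(params[i] * n for params, n in updates) / total
            for i in range(len(global_params))
        )
    return global_params


# Hypothetical clients whose private data both follow y = 2x + 1.
client_a = [(x, 2 * x + 1) for x in (-1.0, -0.5, 0.0)]
client_b = [(x, 2 * x + 1) for x in (0.5, 1.0, 1.5, 2.0)]
w, b = federated_averaging([client_a, client_b])
```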

Homomorphic Encryption

Homomorphic encryption allows computations to be performed on encrypted data without decrypting it. This means that data can be processed securely without ever being exposed in its plaintext form. Homomorphic encryption is a powerful tool for protecting sensitive data in machine learning applications, particularly when data is processed in untrusted environments such as the cloud. While computationally expensive, homomorphic encryption is becoming increasingly practical for certain types of machine learning tasks. Current research focuses on improving the efficiency and scalability of homomorphic encryption techniques.
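Fully homomorphic schemes are too involved for a short example, but the idea can be illustrated with Paillier encryption, a partially homomorphic scheme: multiplying two ciphertexts yields an encryption of the sum of their plaintexts, and raising a ciphertext to a plaintext power multiplies the hidden value. Those two operations are enough for an untrusted server to score a linear model with non-negative integer weights on encrypted features. The primes below are tiny, the function names are hypothetical, and the code is an insecure toy for illustration only; real systems should use a vetted library (for example, Microsoft SEAL or OpenFHE for fully homomorphic schemes).

```python
import math
import random


def generate_keypair(p, q):
    """Toy Paillier key generation from two primes (far too small to be secure)."""
    n = p * q
    g = n + 1  # common simplified choice of generator
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p - 1, q - 1)
    # With g = n + 1, L(g^lam mod n^2) = lam mod n, so mu = lam^-1 mod n.
    mu = pow(lam, -1, n)  # modular inverse, Python 3.8+
    return (n, g), (lam, mu, n)


def encrypt(pub, m, rng):
    n, g = pub
    n_sq = n * n
    r = rng.randrange(1, n)
    while math.gcd(r, n) != 1:
        r = rng.randrange(1, n)
    return (pow(g, m, n_sq) * pow(r, n, n_sq)) % n_sq


def decrypt(priv, c):
    lam, mu, n = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n) * mu % n


def add_encrypted(pub, c1, c2):
    """Enc(m1) * Enc(m2) mod n^2 decrypts to m1 + m2 (mod n)."""
    n, _ = pub
    return (c1 * c2) % (n * n)


def mul_plain(pub, c, k):
    """Enc(m)^k mod n^2 decrypts to k * m (mod n)."""
    n, _ = pub
    return pow(c, k, n * n)


def encrypted_dot(pub, enc_features, weights):
    """Server side: Enc(sum w_i * x_i) from Enc(x_i) and plaintext integer weights."""
    acc = 1  # 1 = g^0 * 1^n, a trivial encryption of 0
    for c, w in zip(enc_features, weights):
        acc = add_encrypted(pub, acc, mul_plain(pub, c, w))
    return acc


# Client encrypts its features; the server never sees them in plaintext.
rng = random.Random(0)
pub, priv = generate_keypair(293, 433)
enc_x = [encrypt(pub, v, rng) for v in (3, 5, 2)]
score = decrypt(priv, encrypted_dot(pub, enc_x, [4, 1, 7]))  # 4*3 + 1*5 + 7*2 = 31
```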

Conclusion

Securing machine learning systems is an ongoing challenge that requires a proactive and adaptive approach. By understanding the security risks, implementing best practices, and leveraging advanced security techniques, it is possible to protect ML models, data, and infrastructure from attacks. As machine learning continues to evolve, security must remain a top priority to ensure the responsible and trustworthy deployment of AI-powered systems.
