How to Optimize Crawl Budget: A Comprehensive Guide

Optimizing crawl budget is a crucial aspect of technical SEO. Search engine crawlers allocate each website a limited ‘budget’ that determines how many pages they will crawl within a given timeframe. Managing this budget efficiently helps ensure that your most important content is crawled and indexed, rather than crawl time being spent on less important or problematic pages.

Understanding Crawl Budget

Crawl budget isn’t just about the number of pages crawled; it’s about efficiency. Google and other search engines allocate crawling resources based on a website’s perceived value and crawl health. Google describes two main factors that determine your crawl budget:

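- Crawl Rate Limit (which Google now calls the crawl capacity limit): The maximum number of parallel connections, and the delay between fetches, a crawler will use without degrading your server’s performance. It rises when your server responds quickly and falls when it slows down or returns server errors.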

- Crawl Demand: How much Google wants to crawl your site, based on its popularity, freshness, and perceived quality.

Ignoring crawl budget can lead to significant SEO issues, such as new content not being indexed promptly, important pages being missed, and wasted resources on crawling low-value pages.

Why is Crawl Budget Optimization Important?

Crawl budget optimization is important for several reasons:

- Faster Indexing: Ensures new and updated content is quickly discovered and indexed by search engines.

- Improved SEO: Helps search engines prioritize your most important pages, improving overall SEO performance.

- Reduced Server Load: Prevents crawlers from overwhelming your server with unnecessary requests.

- Better User Experience: By focusing on high-quality content, you improve the user experience, leading to higher engagement and conversions.

Diagnosing Crawl Budget Issues

Before you can optimize your crawl budget, you need to identify potential problems. Here are some common signs of crawl budget inefficiency:

- Slow Indexing: New pages taking a long time to appear in search results.

- Crawl Errors: A high number of 404 errors, server errors, or soft 404s in Google Search Console.

- Wasted Crawl: Crawlers spending time on low-value pages, such as duplicate content, faceted navigation, or outdated content.

You can use tools like Google Search Console, server log analysis, and website crawlers to identify these issues. Google Search Console provides valuable insights into crawl stats, errors, and indexed pages. Analyzing server logs helps you understand how crawlers are interacting with your website.
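As a starting point for server log analysis, the minimal sketch below counts requests whose user agent claims to be Googlebot, grouped by top-level directory, and reports how many of them hit parameterized URLs. It assumes logs in the "combined" format that Apache and Nginx use by default; the sample lines and example.com paths are hypothetical. User-agent strings can be spoofed, so for a real audit verify Googlebot with the reverse DNS lookup Google recommends.

```python
import re
from collections import Counter
from urllib.parse import urlsplit

# "Combined" log format: IP - user [time] "METHOD PATH PROTO" status size "referer" "user-agent"
LOG_RE = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)

def googlebot_crawl_summary(lines):
    """Count claimed-Googlebot hits per top-level directory and on parameterized URLs."""
    sections = Counter()
    parameterized = 0
    total = 0
    for line in lines:
        m = LOG_RE.match(line)
        if not m or "Googlebot" not in m.group("agent"):
            continue  # skip malformed lines and non-Googlebot traffic
        total += 1
        parts = urlsplit(m.group("path"))
        if parts.query:
            parameterized += 1
        # "/blog/post-1" -> "/blog/", "/" -> "/"
        first = parts.path.strip("/").split("/")[0]
        sections["/" + first + "/" if first else "/"] += 1
    return total, parameterized, sections

BOT = "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"
SAMPLE_LOG = [  # hypothetical log lines
    f'66.249.66.1 - - [10/Mar/2024:10:00:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:05 +0000] "GET /shop/shoes?color=red&sort=price HTTP/1.1" 200 8000 "-" "{BOT}"',
    f'66.249.66.1 - - [10/Mar/2024:10:00:09 +0000] "GET /shop/shoes?color=blue HTTP/1.1" 200 8000 "-" "{BOT}"',
    '203.0.113.5 - - [10/Mar/2024:10:01:00 +0000] "GET /blog/post-1 HTTP/1.1" 200 5120 "-" "Mozilla/5.0 (Windows NT 10.0)"',
]

total, parameterized, sections = googlebot_crawl_summary(SAMPLE_LOG)
print(total, parameterized, dict(sections))
```

A high share of hits on parameterized URLs or on low-value sections is the "wasted crawl" signal described above.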

Strategies for Crawl Budget Optimization

Now that you understand the importance of crawl budget and how to diagnose issues, let’s explore effective optimization strategies:

1. Optimize Your Robots.txt File

The robots.txt file is your first line of defense in controlling crawler access. Use it to block access to:

- Duplicate Content: Prevent crawlers from crawling duplicate versions of your pages.

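- Low-Value Pages: Exclude pages that don’t contribute to your SEO, such as admin pages or resource files that aren’t needed to render your pages. Protect staging environments with authentication instead, since robots.txt is publicly readable and does not stop anyone from visiting a URL.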

- Parameter-Based URLs: Block URLs with unnecessary parameters that don’t change the page content.

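However, be careful when using robots.txt. Blocking important pages stops crawlers from reading their content, and robots.txt is not a reliable way to keep a page out of search results: a blocked URL can still be indexed, without its content, if other pages link to it. Use it strategically to guide crawlers towards your valuable content.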
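A robots.txt applying the rules above might look like the hypothetical file in this sketch; the /admin/, /cart/ and /search paths are placeholders for your own low-value sections. Python’s standard urllib.robotparser module can check which URLs such a file blocks before you deploy it. Be aware that this parser does simple prefix matching, applies the first matching rule rather than the most specific one as Google does, and does not implement the `*` and `$` wildcards Google supports, so test wildcard rules with a tool that handles them.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: block admin pages, cart pages and internal search results.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /cart/
Disallow: /search

Sitemap: https://www.example.com/sitemap.xml
"""

def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content from a string instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser

parser = build_parser(ROBOTS_TXT)
for url in [
    "https://www.example.com/blog/crawl-budget",
    "https://www.example.com/admin/login",
    "https://www.example.com/search?q=shoes",
]:
    verdict = "allowed" if parser.can_fetch("Googlebot", url) else "blocked"
    print(f"{verdict}: {url}")
```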

2. Manage URL Parameters

URL parameters can significantly impact crawl budget. Faceted navigation, tracking parameters, and session IDs can create numerous variations of the same page, wasting crawl resources. Google retired the URL Parameters tool in Search Console in 2022, so you can no longer tell Google there how to handle specific parameters. Instead, use canonical tags to specify the preferred version of a page with parameters, link internally to the clean URL, and use robots.txt to block parameter combinations that should never be crawled.
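To see how much duplication parameters cause, it helps to normalize URLs: drop parameters that don’t change the content, sort the rest, and count how many crawled URLs collapse into the same normalized form. The sketch below assumes a hypothetical set of content-neutral parameters (utm_* tracking tags, gclid, sessionid, ref); which parameters are actually safe to drop depends entirely on your site.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical: parameters assumed NOT to change page content on this site.
IGNORED_PARAMS = {"sessionid", "gclid", "ref"}
IGNORED_PREFIXES = ("utm_",)

def normalize_url(url: str) -> str:
    """Drop content-neutral parameters and sort the rest so equivalent URLs compare equal."""
    parts = urlsplit(url)
    kept = [
        (key, value)
        for key, value in parse_qsl(parts.query, keep_blank_values=True)
        if key.lower() not in IGNORED_PARAMS
        and not key.lower().startswith(IGNORED_PREFIXES)
    ]
    kept.sort()
    # Fragments are never sent to the server, so they are dropped as well.
    return urlunsplit((parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), ""))

def group_variants(urls):
    """Map each normalized URL to the raw variants that collapse into it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize_url(url)].append(url)
    return dict(groups)

crawled = [
    "https://www.example.com/shoes?color=red&utm_source=newsletter",
    "https://www.example.com/shoes?utm_medium=email&color=red",
    "https://www.example.com/shoes?color=red&sessionid=abc123",
    "https://www.example.com/shoes?color=blue",
]
for canonical, variants in group_variants(crawled).items():
    print(canonical, len(variants))
```

The normalized URL is a natural candidate for the canonical tag on each variant.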

3. Eliminate Duplicate Content

Duplicate content is a major drain on crawl budget. Search engines don’t want to waste time crawling multiple versions of the same content. Identify and eliminate duplicate content using:

- Canonical Tags: Specify the preferred version of a page when multiple URLs have the same content.

- 301 Redirects: Redirect duplicate URLs to the preferred version.

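- Noindex Tag: Use the `noindex` robots meta tag (`<meta name="robots" content="noindex">`) to keep duplicate pages out of the index (use with caution). Note that `noindex` does not save crawl budget by itself: Google still has to crawl a page to see the tag, so don’t also block that page in robots.txt.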
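One way to find exact duplicates is to fingerprint each page’s text and group URLs that share a fingerprint; each group then needs a canonical tag or a 301 redirect to one preferred URL. This sketch hashes whitespace- and case-normalized text with SHA-256 and uses a hypothetical "shortest URL wins" rule to pick the preferred version. It only catches exact duplicates after normalization; near-duplicates need fuzzier techniques such as shingling, and in practice the preferred URL is a business decision rather than a length comparison.

```python
import hashlib
from collections import defaultdict

def fingerprint(text: str) -> str:
    """Hash page text after collapsing whitespace and case."""
    normalized = " ".join(text.split()).lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()

def duplicate_groups(pages: dict[str, str]) -> dict[str, list[str]]:
    """Return {preferred_url: [duplicate_urls]} for content shared by two or more URLs."""
    by_hash = defaultdict(list)
    for url, text in pages.items():
        by_hash[fingerprint(text)].append(url)
    result = {}
    for urls in by_hash.values():
        if len(urls) < 2:
            continue
        # Hypothetical rule: the shortest URL becomes the preferred version.
        preferred = min(urls, key=lambda u: (len(u), u))
        result[preferred] = sorted(u for u in urls if u != preferred)
    return result

# Hypothetical page texts keyed by URL.
pages = {
    "https://www.example.com/red-shoes": "Red running shoes.  Free shipping.",
    "https://www.example.com/red-shoes?ref=home": "Red running shoes. Free shipping.",
    "https://www.example.com/print/red-shoes": "red running shoes. free shipping.",
    "https://www.example.com/blue-shoes": "Blue running shoes. Free shipping.",
}
for preferred, dupes in duplicate_groups(pages).items():
    for dupe in dupes:
        # Either place this canonical tag on the duplicate or 301-redirect it.
        print(f'{dupe} -> <link rel="canonical" href="{preferred}">')
```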

4. Fix Broken Links

Broken links create a poor user experience and waste crawl budget. Crawlers will spend time trying to access non-existent pages, which is inefficient. Regularly scan your website for broken links and fix them promptly. Use tools like Screaming Frog or Ahrefs to identify broken links.
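Dedicated crawlers do this at scale, but the core check is simple: extract every link from a page, resolve it to an absolute URL, and look up its HTTP status. The sketch below uses Python’s built-in html.parser and, to stay self-contained, looks statuses up in a hypothetical dictionary (`KNOWN_STATUS`) instead of making network requests; in a real audit that lookup would be an HTTP request per unique URL.

```python
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> elements."""

    def __init__(self, base_url: str):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href:
            # Resolve relative links and drop #fragments, which never reach the server.
            absolute, _fragment = urldefrag(urljoin(self.base_url, href))
            self.links.append(absolute)

def find_broken_links(html: str, page_url: str, status_lookup: dict[str, int]) -> list[tuple[str, int]]:
    """Return (url, status) for every linked URL whose known status is 4xx or 5xx."""
    extractor = LinkExtractor(page_url)
    extractor.feed(html)
    broken = []
    for link in dict.fromkeys(extractor.links):  # de-duplicate, keep page order
        status = status_lookup.get(link)
        if status is not None and status >= 400:  # unchecked URLs are skipped
            broken.append((link, status))
    return broken

# Hypothetical status codes standing in for real HTTP checks.
KNOWN_STATUS = {
    "https://www.example.com/guide": 200,
    "https://www.example.com/old-page": 404,
    "https://www.example.com/api/feed": 500,
}
PAGE = """
<p>Read the <a href="/guide#intro">guide</a>, the <a href="old-page">old page</a>
and the <a href="/api/feed">feed</a>.</p>
"""
for link, status in find_broken_links(PAGE, "https://www.example.com/", KNOWN_STATUS):
    print(status, link)
```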

5. Optimize Your Sitemap

A well-structured sitemap helps search engines discover and crawl your important pages. Ensure your sitemap is up-to-date, accurate, and includes only indexable pages. Submit your sitemap to Google Search Console to help Google understand your website’s structure.
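If your CMS doesn’t generate a sitemap for you, a minimal one is easy to build. The sketch below writes the standard sitemaps.org XML format with `<loc>` and `<lastmod>` entries using Python’s xml.etree.ElementTree; the page list is hypothetical and would come from your own list of canonical, indexable URLs. A single sitemap file is limited to 50,000 URLs and 50 MB uncompressed, so larger sites need several files tied together by a sitemap index, and `<lastmod>` should only change when the page content actually changes.

```python
import xml.etree.ElementTree as ET
from datetime import date

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(pages: list[tuple[str, date]]) -> str:
    """Return sitemap XML for (canonical_url, last_modified) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url, last_modified in pages:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
        # W3C date format (YYYY-MM-DD) is accepted for <lastmod>.
        ET.SubElement(entry, "lastmod").text = last_modified.isoformat()
    xml_body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + xml_body

# Hypothetical list of indexable, canonical pages.
pages = [
    ("https://www.example.com/", date(2024, 3, 1)),
    ("https://www.example.com/blog/crawl-budget", date(2024, 3, 8)),
]
print(build_sitemap(pages))
```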

6. Improve Website Speed

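Website speed is a ranking signal, and server response time directly affects crawl budget: when your server responds quickly, Google can raise its crawl capacity limit, and when it slows down or returns errors, Google crawls less. Faster websites allow crawlers to crawl more pages within their allocated time. Optimize your website speed by: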

- Optimizing Images: Compress images to reduce file size without sacrificing quality.

- Leveraging Browser Caching: Enable browser caching to store static assets on users’ devices.

- Minifying CSS and JavaScript: Reduce the size of your CSS and JavaScript files by removing unnecessary characters.

- Using a Content Delivery Network (CDN): Distribute your website’s content across multiple servers to improve loading times for users around the world.
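For crawl budget, the speed that matters most is how quickly your server answers crawler requests. If your access logs record a response time per request (for example by adding Nginx’s `$request_time` variable to the log format), a short script can summarize how fast Googlebot is being served. This sketch works on already-parsed, hypothetical (path, seconds) pairs, and the one-second threshold is an arbitrary example, not a Google limit.

```python
import statistics

def response_time_summary(samples: list[tuple[str, float]], slow_threshold: float = 1.0) -> dict:
    """Summarize response times (in seconds) for crawler requests."""
    times = [seconds for _path, seconds in samples]
    slow_paths = sorted({path for path, seconds in samples if seconds > slow_threshold})
    return {
        "requests": len(times),
        "median_s": statistics.median(times),
        "slow_share": sum(t > slow_threshold for t in times) / len(times),
        "slow_paths": slow_paths,
    }

# Hypothetical Googlebot requests extracted from an access log.
samples = [
    ("/", 0.12),
    ("/blog/crawl-budget", 0.25),
    ("/shop/shoes?color=red", 1.80),
    ("/shop/shoes?color=blue", 2.10),
    ("/about", 0.30),
]
print(response_time_summary(samples))
```

Slow paths that cluster around one template or parameter pattern usually point to a specific backend bottleneck.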

7. Prioritize High-Quality Content

Focus on creating high-quality, unique, and engaging content. Search engines are more likely to crawl and index content that provides value to users. Regularly update your content to keep it fresh and relevant.

8. Monitor and Adjust

Crawl budget optimization is an ongoing process. Continuously monitor the Crawl Stats report in Google Search Console (under Settings) and adjust your strategies as needed. Pay attention to crawl errors, wasted crawl, and indexing issues. Adapt your approach based on the data you collect.
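Alongside the Search Console report, your own logs let you watch for the responses that make Google slow down crawling, such as a rising share of 5xx or 429 status codes. The sketch below computes, per day, the share of Googlebot requests that received one of those responses; the records are hypothetical (date, status) pairs extracted from your logs, and the 5% alert threshold is an arbitrary example.

```python
from collections import defaultdict

def daily_error_share(records: list[tuple[str, int]]) -> dict[str, float]:
    """Share of requests per day answered with 429 or any 5xx status."""
    totals = defaultdict(int)
    errors = defaultdict(int)
    for day, status in records:
        totals[day] += 1
        if status == 429 or 500 <= status <= 599:
            errors[day] += 1
    return {day: errors[day] / totals[day] for day in sorted(totals)}

# Hypothetical Googlebot (date, status) records extracted from access logs.
records = [
    ("2024-03-09", 200), ("2024-03-09", 200), ("2024-03-09", 304), ("2024-03-09", 404),
    ("2024-03-10", 200), ("2024-03-10", 503), ("2024-03-10", 503), ("2024-03-10", 429),
]
ALERT_THRESHOLD = 0.05  # hypothetical: flag days where more than 5% of crawler hits failed
for day, share in daily_error_share(records).items():
    flag = "ALERT" if share > ALERT_THRESHOLD else "ok"
    print(f"{day}: {share:.0%} error responses ({flag})")
```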

Advanced Crawl Budget Techniques

Beyond the basic strategies, consider these advanced techniques for more granular control over your crawl budget:

1. Implementing HTTP/2

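HTTP/2 allows multiple requests to be sent over a single connection, reducing overhead and improving website speed. Googlebot has been able to crawl over HTTP/2 since 2020; the main benefit is lower resource use for both your server and Googlebot rather than a ranking boost, and that efficiency can help crawlers crawl more pages within their budget.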

2. Using Conditional GET Requests

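Conditional GET requests allow crawlers to check whether a page has changed since their last visit by sending back the validators from the previous response: If-Modified-Since (based on the Last-Modified header) or If-None-Match (based on the ETag header). If the page hasn’t changed, the server can respond with a 304 Not Modified status and no body, saving crawl resources.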
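On the server side, supporting conditional requests means comparing the validators a crawler sends with the current version of the page. The framework-agnostic sketch below decides between 200 and 304 for a page whose ETag and last-modified time are assumed to come from your CMS; many web servers and frameworks already do this for static files. Following the HTTP specification (RFC 9110), `If-None-Match` takes precedence when both headers are present, and ETags are compared weakly (so `W/"v42"` matches `"v42"`).

```python
from datetime import datetime, timezone
from email.utils import format_datetime, parsedate_to_datetime

def _opaque(tag: str) -> str:
    """Strip the weak-validator prefix so W/"x" and "x" compare equal (weak comparison)."""
    return tag.strip().removeprefix("W/")

def conditional_status(request_headers: dict[str, str], etag: str, last_modified: datetime) -> int:
    """Return 304 if the client's cached copy is still current, else 200.

    Assumes header names are already normalized to the capitalization used below.
    """
    if_none_match = request_headers.get("If-None-Match")
    if if_none_match is not None:
        # RFC 9110: when If-None-Match is present, If-Modified-Since is ignored.
        tags = [_opaque(tag) for tag in if_none_match.split(",")]
        return 304 if "*" in tags or _opaque(etag) in tags else 200
    if_modified_since = request_headers.get("If-Modified-Since")
    if if_modified_since:
        try:
            since = parsedate_to_datetime(if_modified_since)
        except (TypeError, ValueError):
            return 200  # unparseable date: ignore the header and send the full page
        if since.tzinfo is None:
            since = since.replace(tzinfo=timezone.utc)
        # HTTP dates have one-second resolution, so drop microseconds before comparing.
        if last_modified.replace(microsecond=0) <= since:
            return 304
    return 200

# Hypothetical page metadata from a CMS.
page_etag = '"v42"'
page_modified = datetime(2024, 3, 1, 12, 0, tzinfo=timezone.utc)

print(conditional_status({"If-None-Match": '"v42"'}, page_etag, page_modified))
print(conditional_status({"If-Modified-Since": format_datetime(page_modified, usegmt=True)}, page_etag, page_modified))
print(conditional_status({"If-Modified-Since": "Thu, 01 Feb 2024 00:00:00 GMT"}, page_etag, page_modified))
```

A real 304 response should still carry the page’s ETag and caching headers so the crawler can keep validating its copy.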

3. AJAX Crawling (Deprecated, but Relevant for Legacy Sites)

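For websites that rely heavily on JavaScript, ensure that search engines can crawl and render your content correctly. Google deprecated its old AJAX crawling scheme (#! URLs backed by _escaped_fragment_ snapshots) in 2015 and no longer uses it; it now renders JavaScript pages directly. Rendering still takes extra resources, so legacy sites built on that scheme should migrate, and any JavaScript-heavy site benefits from putting key content and links in the initial HTML.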
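A quick check for JavaScript-heavy pages is to inspect the raw HTML the server sends, before any script runs, and see whether navigation uses crawlable links. Google’s guidance is to use `<a>` elements with an `href` that resolves to a real URL; links that exist only as `onclick` handlers or `javascript:` URLs may not be followed. This sketch flags such anchors in a hypothetical HTML snippet. It does not execute JavaScript, so it approximates what a crawler sees before rendering.

```python
from html.parser import HTMLParser

class UncrawlableLinkFinder(HTMLParser):
    """Flag <a> elements that a crawler cannot follow without running JavaScript."""

    def __init__(self):
        super().__init__()
        self.problems = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attributes = dict(attrs)
        href = (attributes.get("href") or "").strip()
        if not href:
            self.problems.append(("missing href", attributes))
        elif href.lower().startswith("javascript:"):
            self.problems.append(("javascript: URL", attributes))
        elif href.startswith("#") and "onclick" in attributes:
            self.problems.append(("fragment link driven by onclick", attributes))

# Hypothetical server-rendered navigation.
RAW_HTML = """
<nav>
  <a href="/products">Products</a>
  <a onclick="loadPage('pricing')">Pricing</a>
  <a href="javascript:void(0)" onclick="loadPage('blog')">Blog</a>
  <a href="#" onclick="loadPage('about')">About</a>
</nav>
"""

finder = UncrawlableLinkFinder()
finder.feed(RAW_HTML)
for reason, attributes in finder.problems:
    print(reason, attributes)
```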

4. Internal Linking Optimization

Strategic internal linking helps search engine crawlers discover and prioritize your important pages. Ensure your internal links are relevant, descriptive, and point to high-quality content.
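To check whether internal links actually point at your priority pages, count how many distinct internal pages link to each URL. The sketch below works on a hypothetical link graph (page mapped to the pages it links to) of the kind a site crawler can export; pages with few or no inbound links are the ones crawlers are least likely to find.

```python
from collections import Counter

def inbound_link_counts(link_graph: dict[str, list[str]]) -> Counter:
    """Count distinct internal pages linking to each URL (self-links ignored)."""
    counts = Counter({page: 0 for page in link_graph})
    for source, targets in link_graph.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts

# Hypothetical internal link graph exported from a site crawl.
link_graph = {
    "/": ["/blog/", "/shop/", "/about"],
    "/blog/": ["/", "/blog/crawl-budget", "/blog/crawl-budget"],
    "/blog/crawl-budget": ["/", "/shop/"],
    "/shop/": ["/"],
    "/about": ["/"],
    "/landing/spring-sale": ["/shop/"],
}
counts = inbound_link_counts(link_graph)
orphans = [page for page, n in counts.items() if n == 0]
print(counts.most_common(3))
print("orphans:", orphans)
```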

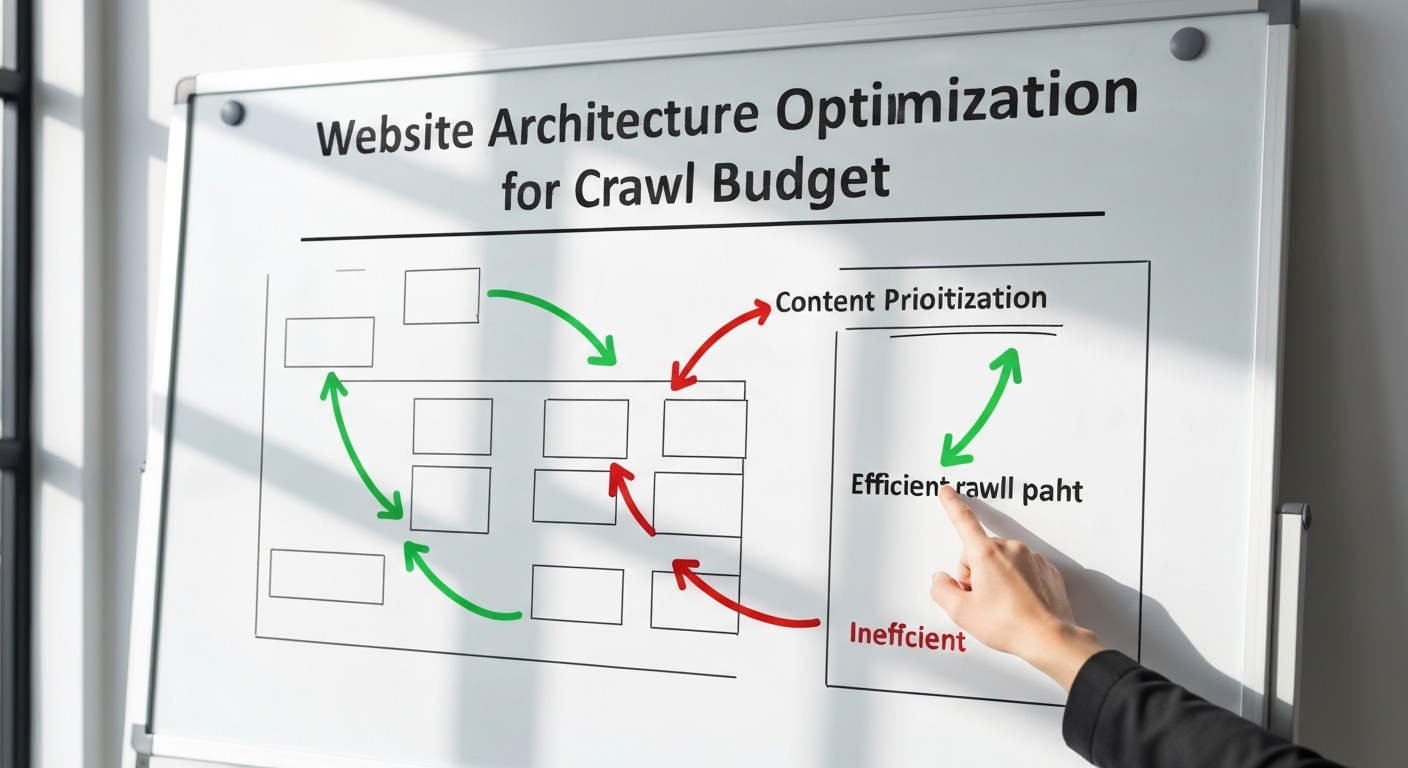

Crawl Budget and Website Architecture

Your website’s architecture plays a significant role in crawl budget efficiency. A well-organized website with a clear hierarchy makes it easier for crawlers to discover and index your content. Consider these architectural principles:

- Flat Architecture: Reduce the number of clicks required to reach important pages.

- Logical Navigation: Create a clear and intuitive navigation structure that guides users and crawlers.

- Internal Linking: Use internal links to connect related pages and improve crawlability.
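Click depth, the minimum number of links a crawler must follow from the homepage to reach a page, is a practical way to measure how flat an architecture is. A breadth-first search over the internal link graph computes it. The graph below is hypothetical, and the "deeper than 3 clicks" cutoff is a common rule of thumb rather than a documented search engine limit.

```python
from collections import deque

def click_depths(link_graph: dict[str, list[str]], start: str = "/") -> dict[str, int]:
    """Breadth-first search: minimum number of clicks from `start` to each reachable page."""
    depths = {start: 0}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in link_graph.get(page, []):
            if target not in depths:  # the first visit in BFS is along a shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths

# Hypothetical site: a product buried under pagination, plus a page nothing links to.
link_graph = {
    "/": ["/category/", "/about"],
    "/category/": ["/category/?page=2"],
    "/category/?page=2": ["/category/?page=3"],
    "/category/?page=3": ["/product/blue-widget"],
    "/about": [],
    "/product/blue-widget": [],
    "/old-promo": ["/"],
}
depths = click_depths(link_graph)
too_deep = sorted(page for page, depth in depths.items() if depth > 3)
unreachable = sorted(set(link_graph) - set(depths))
print(depths)
print("deeper than 3 clicks:", too_deep)
print("unreachable from homepage:", unreachable)
```

Linking the product from the category’s first page, or from a related hub page, would bring it within a few clicks of the homepage.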

A well-structured website not only improves crawl budget but also enhances user experience, leading to higher engagement and conversions.

Conclusion

Knowing how to optimize crawl budget is a critical aspect of technical SEO. By understanding how search engines crawl and index your website, you can implement strategies to ensure that your most important content is discovered and indexed effectively. Optimizing your robots.txt file, managing URL parameters, eliminating duplicate content, fixing broken links, improving website speed, and creating high-quality content are essential steps in maximizing your crawl budget. Remember to continuously monitor your crawl stats and adjust your strategies as needed. By prioritizing crawl budget optimization, you help search engines find and index your important pages promptly, which supports your website’s overall SEO performance.
