How Machine Learning Improves Data Privacy: Techniques and Applications

Machine learning (ML) is often viewed as a threat to privacy, but it also offers tools and techniques that strengthen data protection. This article explores several ways ML can be used to safeguard sensitive information while still enabling valuable insights and analysis.

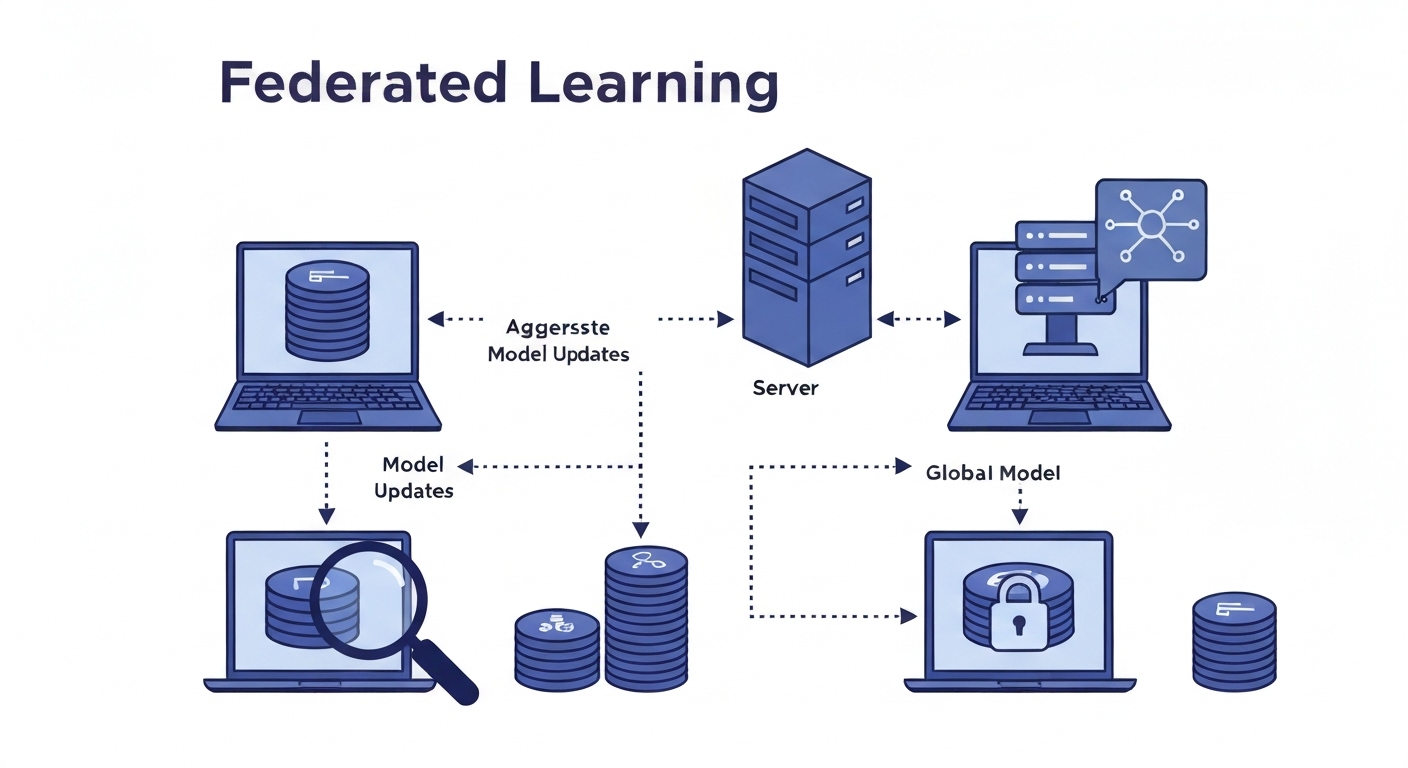

The Role of Federated Learning in Protecting Data

Federated learning is a distributed machine learning approach that allows models to be trained on decentralized data sources without directly accessing or sharing the raw data. This is particularly useful when sensitive data is stored on individual devices or within different organizations. Instead of centralizing the data, federated learning brings the model to the data: each participant trains the model locally and sends only its model updates to a coordinator, which aggregates them into a shared model. The aggregated model is then sent back to the participants, so overall performance improves while the raw data never leaves its source. Model updates can still leak some information about the underlying data, which is why federated learning is often combined with differential privacy or secure aggregation.
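The train-locally-then-aggregate loop can be sketched in a few lines of Python. This is a minimal illustration, not a production setup: it assumes a one-dimensional linear model, three simulated clients with synthetic data, and size-weighted averaging of client models. The function names (`local_update`, `federated_average`, `make_client_data`) and all parameter values are hypothetical choices made for this example.

```python
import random

def local_update(weights, data, lr=0.1, epochs=20):
    """Run a few gradient steps of linear regression (y ~ w*x + b) on one client's data."""
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)

def federated_average(client_weights, client_sizes):
    """Average the client models, weighting each by its local dataset size."""
    total = sum(client_sizes)
    w = sum(cw[0] * n for cw, n in zip(client_weights, client_sizes)) / total
    b = sum(cw[1] * n for cw, n in zip(client_weights, client_sizes)) / total
    return (w, b)

def make_client_data(n, rng):
    """Synthetic private data following y = 3x + 1 plus small Gaussian noise."""
    xs = [rng.uniform(-1, 1) for _ in range(n)]
    return [(x, 3 * x + 1 + rng.gauss(0, 0.05)) for x in xs]

rng = random.Random(0)
clients = [make_client_data(n, rng) for n in (20, 50, 30)]

global_model = (0.0, 0.0)
for round_idx in range(30):
    # Each client starts from the current global model and trains on its own data.
    updates = [local_update(global_model, data) for data in clients]
    # Only the model parameters travel to the coordinator, never the raw records.
    global_model = federated_average(updates, [len(d) for d in clients])

print(global_model)  # should land close to the true parameters (3, 1)
```

Only `updates` crosses the client boundary in this sketch; the lists in `clients` stand in for data that would stay on each device or inside each organization.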

Benefits of Federated Learning

- Enhanced Privacy: Data remains on the user’s device or within the organization’s control.

- Reduced Data Transfer: Minimizes the need to transfer large datasets, reducing the risk of data breaches during transmission.

- Improved Model Generalization: Training on diverse, decentralized data can lead to more robust and generalizable models.

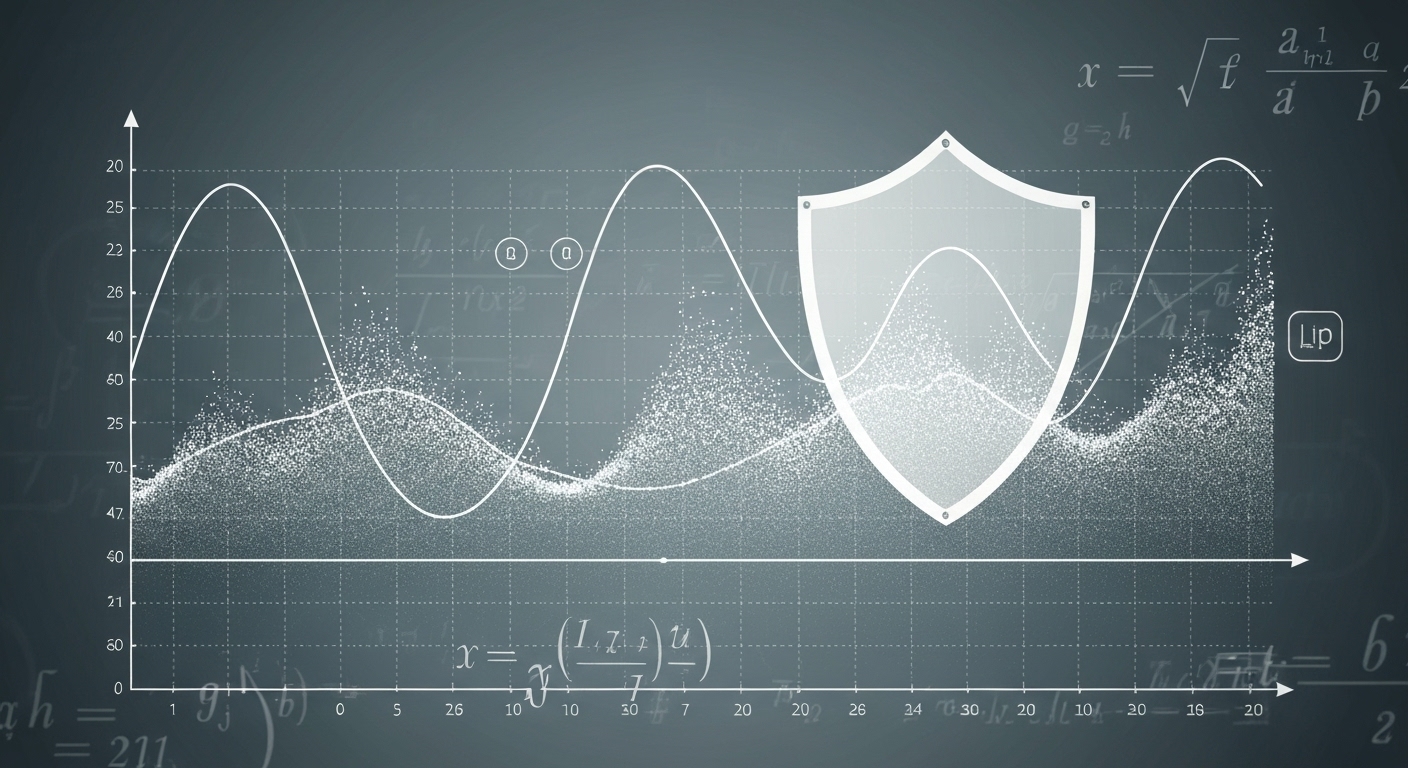

Differential Privacy: Adding Noise for Enhanced Security

Differential privacy is a technique that adds carefully calibrated noise to data or model outputs to protect the privacy of individuals represented in the dataset. The goal is to ensure that the presence or absence of any single individual’s data does not significantly affect the outcome of the analysis. This makes it difficult for attackers to infer sensitive information about specific individuals.
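One common way to add calibrated noise is the Laplace mechanism. The sketch below applies it to a counting query. It assumes a sensitivity of 1 (adding or removing one person changes a count by at most 1) and a privacy parameter `epsilon` chosen by the analyst; the helper names and the sample data are hypothetical. Production systems should use a vetted differential privacy library, since naive floating-point noise sampling has known weaknesses.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two exponential draws."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Return a noisy count of the records that match the predicate.

    The noise scale is sensitivity / epsilon, so a smaller epsilon means
    more noise and stronger privacy.
    """
    true_count = sum(1 for r in records if predicate(r))
    sensitivity = 1  # one individual changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

rng = random.Random(42)
ages = [23, 35, 41, 29, 52, 67, 38, 45, 31, 58]

# The true answer is 5; each call returns 5 plus fresh Laplace noise.
noisy = private_count(ages, lambda a: a >= 40, epsilon=0.5, rng=rng)
print(noisy)
```

Because the noise has mean zero, aggregate results stay useful over large datasets, while any single released value says little about whether a particular person is in the data.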

Applications of Differential Privacy

- Statistical Analysis: Protects the privacy of individuals in statistical datasets while still allowing for accurate analysis.

- Model Training: Limits how much a model can memorize specific training examples, reducing the risk of membership inference attacks.

- Data Release: Allows aggregate statistics or synthetic datasets to be released for research and development purposes with a quantifiable privacy guarantee.

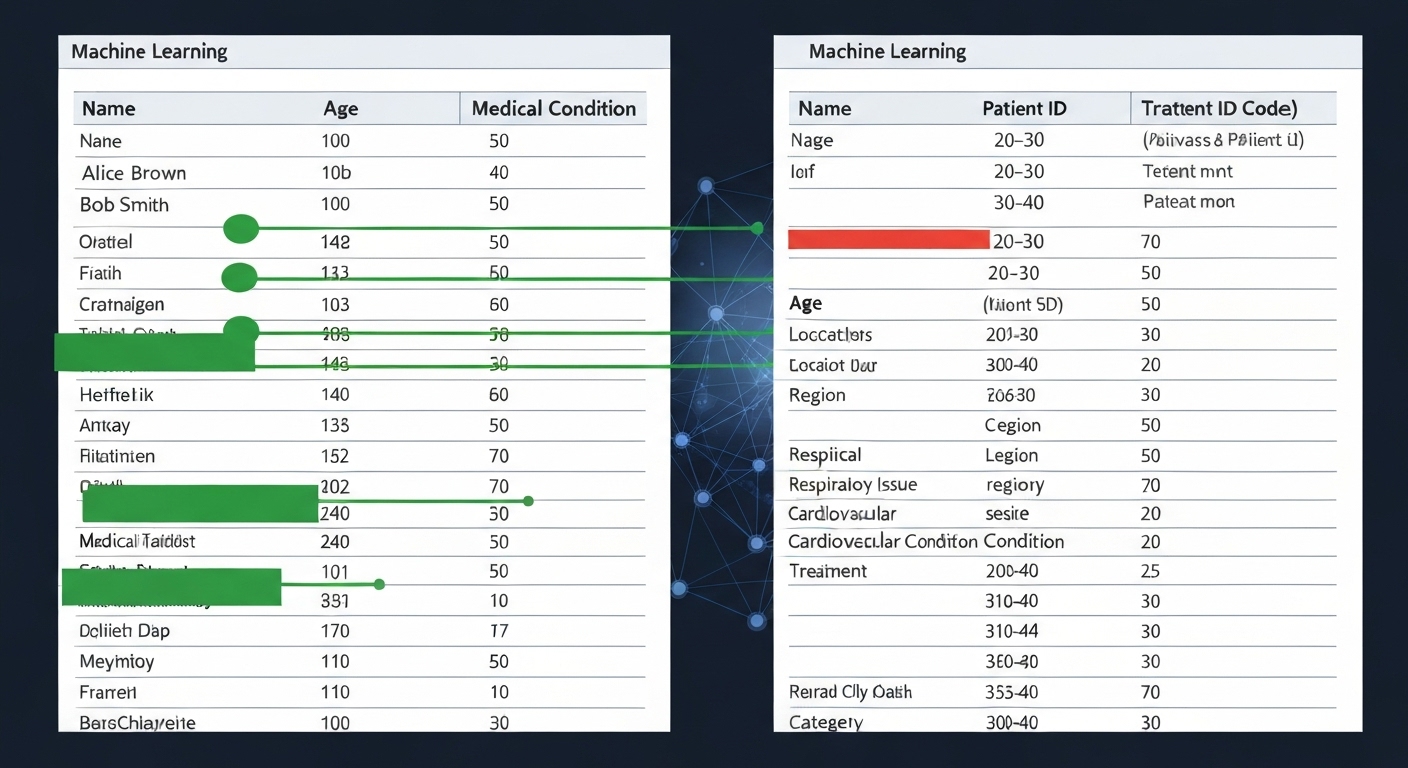

Data Anonymization Techniques Using Machine Learning

Data anonymization involves transforming data in such a way that it is no longer possible to identify individuals. Machine learning can be used to develop more sophisticated anonymization techniques that preserve data utility while protecting privacy. For example, ML algorithms can identify and redact sensitive attributes, generalize data values, or synthesize new data points that maintain the statistical properties of the original dataset.

Advanced Anonymization Methods

- k-Anonymity: Ensures that each record in a dataset is indistinguishable from at least k-1 other records with respect to its quasi-identifiers (attributes such as age or ZIP code that could be linked to an individual).

- l-Diversity: Requires that each equivalence class (a group of records sharing the same quasi-identifier values) contains at least l well-represented values for sensitive attributes.

- t-Closeness: Guarantees that the distribution of sensitive attributes in each equivalence class is within a threshold t of the distribution in the entire dataset.
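The first two properties are easy to check on a table that has already been generalized. The following sketch measures k-anonymity and the simplest variant of l-diversity (counting distinct sensitive values per class). The column names, the sample records, and the function names are made up for illustration; t-closeness is not covered here.

```python
from collections import defaultdict

def equivalence_classes(records, quasi_identifiers):
    """Group records that share the same quasi-identifier values."""
    groups = defaultdict(list)
    for rec in records:
        key = tuple(rec[q] for q in quasi_identifiers)
        groups[key].append(rec)
    return groups

def k_anonymity(records, quasi_identifiers):
    """Largest k for which the table is k-anonymous (size of the smallest class)."""
    groups = equivalence_classes(records, quasi_identifiers)
    return min(len(g) for g in groups.values())

def l_diversity(records, quasi_identifiers, sensitive):
    """Distinct l-diversity: fewest distinct sensitive values found in any class."""
    groups = equivalence_classes(records, quasi_identifiers)
    return min(len({r[sensitive] for r in g}) for g in groups.values())

# Hypothetical table with age and ZIP code already generalized.
records = [
    {"age": "30-39", "zip": "130**", "diagnosis": "flu"},
    {"age": "30-39", "zip": "130**", "diagnosis": "asthma"},
    {"age": "30-39", "zip": "130**", "diagnosis": "flu"},
    {"age": "40-49", "zip": "148**", "diagnosis": "diabetes"},
    {"age": "40-49", "zip": "148**", "diagnosis": "diabetes"},
]

print(k_anonymity(records, ["age", "zip"]))               # 2
print(l_diversity(records, ["age", "zip"], "diagnosis"))  # 1
```

The table is 2-anonymous, yet its l-diversity is only 1: everyone in the 40-49 group has the same diagnosis, so knowing that a person falls in that group reveals their condition. This is the gap that l-diversity and t-closeness are meant to close.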

Secure Multi-Party Computation (SMPC) for Collaborative Data Analysis

Secure Multi-Party Computation (SMPC) allows multiple parties to jointly compute a function over their private data without revealing their individual inputs to each other. This is achieved through cryptographic protocols, such as secret sharing and garbled circuits, that let the parties compute on encrypted or split-up data so that only the final result is revealed. SMPC can be used to train machine learning models collaboratively, even when the data is distributed across different organizations with strict privacy requirements.

One of the key applications of SMPC is in healthcare, where hospitals and research institutions can collaborate to analyze patient data without sharing sensitive medical records. This can lead to improved diagnostic models and more effective treatments while maintaining patient privacy.
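A simple building block behind many SMPC protocols is additive secret sharing. The sketch below uses it to compute a joint sum, for instance the total number of cases across three hospitals, without any hospital disclosing its own count. It simulates all parties in one process and assumes they follow the protocol honestly; the modulus, function names, and input values are illustrative assumptions.

```python
import secrets

MODULUS = 2**61 - 1  # public modulus; all share arithmetic is done mod this value

def share(secret, n_parties):
    """Split a secret into n random-looking shares that sum to it mod MODULUS."""
    shares = [secrets.randbelow(MODULUS) for _ in range(n_parties - 1)]
    shares.append((secret - sum(shares)) % MODULUS)
    return shares

def secure_sum(private_inputs):
    """Compute the sum of all inputs while exposing only shares and partial sums."""
    n = len(private_inputs)
    # Each party splits its input and hands one share to every party.
    all_shares = [share(x, n) for x in private_inputs]
    # Party j adds up the shares it received; this value alone reveals no input.
    partial_sums = [sum(all_shares[i][j] for i in range(n)) % MODULUS for j in range(n)]
    # Combining the published partial sums reconstructs only the total.
    return sum(partial_sums) % MODULUS

hospital_counts = [120, 75, 310]  # each value is private to one hospital
total = secure_sum(hospital_counts)
print(total)  # 505
```

Each individual share is uniformly random, so a party learns nothing about another party's input from the shares it holds. Real deployments add secure channels, protection against dishonest participants, and support for richer computations than a sum.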

AI Ethics and Data Governance

Ethical considerations are crucial when using machine learning to improve data privacy. Organizations must establish clear data governance policies that address issues such as data collection, storage, and usage. These policies should align with relevant regulations like GDPR and CCPA, and should be regularly reviewed and updated to reflect evolving best practices.

Transparency and accountability are also essential. Users should be informed about how their data is being used and have the right to access, correct, and delete their data. Organizations should also be accountable for any breaches of data privacy and should implement measures to prevent future incidents.

Machine Learning Security and Privacy-Preserving Techniques

Enhancing machine learning security can significantly improve data privacy. Techniques like adversarial training make models more robust against inputs crafted to manipulate their outputs, while defenses such as differential privacy limit attacks that attempt to extract sensitive information from a trained model. Furthermore, privacy-preserving machine learning (PPML) techniques, which combine machine learning with cryptographic methods, can provide strong, formally defined guarantees of data privacy.

PPML techniques include homomorphic encryption, which allows computations to be performed on encrypted data without decrypting it, and secure aggregation, which enables the aggregation of encrypted data without revealing individual contributions. These techniques can be used to train and deploy machine learning models in a privacy-preserving manner.
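To make the idea of computing on encrypted data concrete, here is a toy version of the Paillier cryptosystem, an additively homomorphic scheme in which multiplying two ciphertexts yields an encryption of the sum of their plaintexts. This is a sketch only: the tiny primes make it completely insecure, the function names are chosen for this example, and it assumes Python 3.8 or later for the modular inverse via `pow`. Real systems should rely on a vetted cryptographic library.

```python
import math
import secrets

def keygen(p=293, q=433):
    """Build a toy key pair from two small primes (insecure, for illustration)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    mu = pow(phi, -1, n)  # modular inverse of phi mod n
    return n, (phi, mu)   # public key n, private key (phi, mu)

def encrypt(n, m):
    """Encrypt an integer 0 <= m < n using the generator g = n + 1."""
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1  # random blinding factor in [1, n-1]
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2

def decrypt(n, private_key, c):
    """Recover the plaintext from a ciphertext using the private key."""
    phi, mu = private_key
    n2 = n * n
    l_value = (pow(c, phi, n2) - 1) // n
    return (l_value * mu) % n

def add_encrypted(n, c1, c2):
    """Multiply ciphertexts to add the underlying plaintexts (mod n)."""
    return (c1 * c2) % (n * n)

n, private_key = keygen()
c1 = encrypt(n, 12)
c2 = encrypt(n, 30)

# The party doing the addition never sees 12 or 30, only ciphertexts.
c_sum = add_encrypted(n, c1, c2)
print(decrypt(n, private_key, c_sum))  # 42
```

The same property supports secure aggregation: a server can combine encrypted contributions from many participants, and only the holder of the private key can read the total.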

Real-World Applications and Case Studies

Organizations in several domains are already leveraging machine learning to improve data privacy. For example, healthcare providers are using federated learning to train diagnostic models on patient data without sharing sensitive medical records. Financial institutions are using differential privacy to analyze transaction data while protecting the privacy of their customers. Government agencies are using data anonymization techniques to release statistical datasets for research purposes.

These real-world applications demonstrate the potential of machine learning to enhance data privacy while still enabling valuable insights and analysis. As machine learning technology continues to evolve, we can expect to see even more innovative solutions that balance the need for data privacy with the desire to extract value from data.

Conclusion

Machine learning improves data privacy through a range of techniques. Federated learning, differential privacy, data anonymization, and secure multi-party computation are just a few examples of how ML can be used to protect sensitive information. As data privacy becomes increasingly important, these techniques will play a crucial role in ensuring that data is used responsibly and ethically. Organizations that want to leverage the power of data while safeguarding the privacy of individuals need robust data governance policies and should stay informed about the latest advancements in machine learning security and privacy-preserving techniques.

For further reading on GDPR compliance, visit the gdpr.eu website.