What Is a Robots.txt File and How to Use It: A Comprehensive Guide

What is a robots.txt file, and how do you use it? This is a key question for anyone involved in website management and search engine optimization (SEO). A robots.txt file is a set of instructions for web robots, chiefly search engine crawlers, telling them which parts of your website they may or may not crawl. Understanding and properly implementing this file helps you manage your website’s crawl budget, keep crawlers away from private or unimportant pages, and ultimately improve your SEO performance.

Understanding the Basics: What is a Robots.txt File?

At its core, a robots.txt file is a plain text file located in the root directory of your website. It uses a specific syntax to communicate directives to web robots, primarily search engine crawlers such as Googlebot and Bingbot. Crawlers read these instructions to decide which URLs they may crawl and which to skip. Think of it as a polite request to the search engines, guiding their behavior on your site.

The Importance of Robots.txt for SEO

A properly configured robots.txt file can significantly impact your website’s SEO in several ways:

- Crawl Budget Optimization: Search engines allocate a certain amount of ‘crawl budget’ to each website. A robots.txt file helps you direct crawlers to your most important pages, ensuring that valuable content is indexed and preventing them from wasting resources on unimportant or duplicate content.

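- Keeping Crawlers Out of Private Areas: You can use robots.txt to stop search engines from crawling areas of your site such as admin panels, internal search results pages, or staging environments. Note that blocking crawling does not guarantee a URL stays out of the index: a blocked URL can still be indexed, without its content, if other pages link to it. To keep a page out of search results entirely, use a noindex directive or require authentication.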

- Avoiding Duplicate Content Issues: By disallowing crawlers from accessing certain URL parameters or dynamically generated pages, you can prevent duplicate content issues that can negatively impact your search rankings.

Robots.txt Syntax and Directives

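The robots.txt file uses a simple line-based syntax. Each line has the form ‘Directive: value’, and rules are organized into groups: a group starts with one or more ‘User-agent’ lines and is followed by ‘Disallow’ (and sometimes ‘Allow’) rules.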

User-agent: Specifying the Target Robot

The User-agent directive specifies which web robot the rules that follow apply to. You can target a specific crawler (e.g., User-agent: Googlebot) or all crawlers (User-agent: *). The asterisk (*) is a wildcard that matches every user agent that has no more specific group of its own.

Disallow: Blocking Access to Specific Pages or Directories

The Disallow directive tells the specified user-agent not to access the specified URL or directory. For example, Disallow: /private/ would prevent crawlers from accessing any files or folders within the ‘private’ directory.

Allow: Explicitly Allowing Access (Less Common)

The Allow directive, while less commonly used, explicitly allows a user-agent to access a specific URL or directory, even if it falls within a broader Disallow rule. This can be useful for fine-tuning access control.
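The interaction between Disallow and Allow can be checked locally. The following is a minimal sketch using Python's standard-library `urllib.robotparser`; the paths, the file contents, and the crawler name `ExampleBot` are assumptions made up for illustration. One caveat: Python's parser applies the first matching rule in a group, whereas Google and RFC 9309 apply the longest (most specific) match, so the sketch lists the Allow rule first to get the same answer under both interpretations.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: block /private/ but carve out one public file.
ROBOTS_TXT = """\
User-agent: *
Allow: /private/press-kit.html
Disallow: /private/
"""


def is_allowed(robots_txt: str, user_agent: str, url: str) -> bool:
    """Return True if the rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    base = "https://www.example.com"
    print(is_allowed(ROBOTS_TXT, "ExampleBot", base + "/private/notes.html"))      # False
    print(is_allowed(ROBOTS_TXT, "ExampleBot", base + "/private/press-kit.html"))  # True
    print(is_allowed(ROBOTS_TXT, "ExampleBot", base + "/blog/"))                   # True
```

URLs that match no rule at all, such as `/blog/` here, are allowed by default.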

Sitemap: Declaring Your Sitemap’s Location

The Sitemap directive declares the location of your XML sitemap. While not technically a restriction directive, it helps search engines discover and crawl your website’s pages more efficiently. It is highly recommended to include a sitemap directive in your robots.txt file.
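To see how a crawler might pick up the Sitemap line, here is a small sketch that reads it back with Python's `urllib.robotparser` (the `site_maps()` method requires Python 3.8 or later). The sitemap URL and the `/admin/` path are placeholders, not values from a real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical file: one rule group plus a sitemap declaration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def list_sitemaps(robots_txt: str) -> list[str]:
    """Return every sitemap URL declared in the file (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # site_maps() returns None when no Sitemap line is present.
    return parser.site_maps() or []


if __name__ == "__main__":
    print(list_sitemaps(ROBOTS_TXT))
```

The Sitemap line is independent of any User-agent group, which is why it can sit on its own at the end of the file.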

Robots.txt Examples and Common Use Cases

Let’s look at some practical examples of how to use robots.txt directives:

Example 1: Blocking Access to an Entire Directory

User-agent: *
Disallow: /admin/

This example blocks all search engine crawlers from accessing the ‘admin’ directory and all its contents.

Example 2: Blocking Access to a Specific Page

User-agent: *
Disallow: /private/secret.html

This example prevents crawlers from accessing the ‘secret.html’ file located in the ‘private’ directory.

Example 3: Allowing Googlebot to Access a Specific Directory While Disallowing All Others

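User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /images/
Disallow: /

This example blocks all crawlers from the entire site, except for Googlebot, which may access only the ‘images’ directory. The Googlebot group needs its own Disallow: / line because a crawler follows only the group that matches it most specifically; without that line, Googlebot would ignore the rules under User-agent: * and be free to crawl the whole site. Note that this setup is generally not recommended unless you have a very specific reason for it.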
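Group selection is easy to get wrong, because a crawler that has its own group does not inherit anything from the `User-agent: *` group. The sketch below, which uses Python's `urllib.robotparser` and made-up paths and crawler names, compares a Googlebot group that contains only an Allow rule with one that also contains `Disallow: /`.

```python
from urllib.robotparser import RobotFileParser

# Googlebot group with only an Allow rule: nothing is actually blocked for it.
ALLOW_ONLY = """\
User-agent: *
Disallow: /

User-agent: Googlebot
Allow: /images/
"""

# Same file, with a Disallow added to the Googlebot group.
ALLOW_THEN_DISALLOW = ALLOW_ONLY + "Disallow: /\n"


def can_fetch(robots_txt: str, user_agent: str, path: str) -> bool:
    """Return True if user_agent may fetch path under the given rules."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, path)


if __name__ == "__main__":
    for label, rules in (("allow only", ALLOW_ONLY), ("allow + disallow", ALLOW_THEN_DISALLOW)):
        print(label)
        print("  Googlebot /images/logo.png:", can_fetch(rules, "Googlebot", "/images/logo.png"))
        print("  Googlebot /about.html:     ", can_fetch(rules, "Googlebot", "/about.html"))
        print("  OtherBot  /about.html:     ", can_fetch(rules, "OtherBot", "/about.html"))
```

With the Allow-only group, Googlebot can fetch `/about.html`; only the second variant limits it to `/images/`.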

Example 4: Using a Crawl Delay

While not supported by all search engines (Google, in particular, doesn’t support it), you can suggest a crawl delay:

User-agent: *
Crawl-delay: 10

This suggests a 10-second delay between crawl requests. Be aware that this directive might be ignored.
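For crawlers that do honor the directive, reading the value is straightforward. Here is a hedged sketch of how a custom crawler written in Python might look it up with `urllib.robotparser` (the `crawl_delay()` method exists since Python 3.6); the crawler name `ExampleBot` and the fallback value are assumptions.

```python
from urllib.robotparser import RobotFileParser


def crawl_delay_seconds(robots_txt: str, user_agent: str, default: float = 0.0) -> float:
    """Return the Crawl-delay that applies to user_agent, or default if none is set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    delay = parser.crawl_delay(user_agent)
    return float(delay) if delay is not None else default


if __name__ == "__main__":
    rules = "User-agent: *\nCrawl-delay: 10\n"
    # A polite crawler would sleep this many seconds between requests.
    print(crawl_delay_seconds(rules, "ExampleBot"))
```

A crawler would typically pass the returned value to `time.sleep()` between requests; that part is left out so the sketch runs instantly.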

Robots.txt Best Practices

Following these best practices will help you create effective and efficient robots.txt files:

- Place the robots.txt file in the root directory: The file must be located at the root of your domain (e.g., https://www.example.com/robots.txt).

- Use a plain text editor: Create and edit the robots.txt file using a simple text editor like Notepad (Windows) or TextEdit (Mac). Avoid using word processors, as they may add formatting that can cause errors.

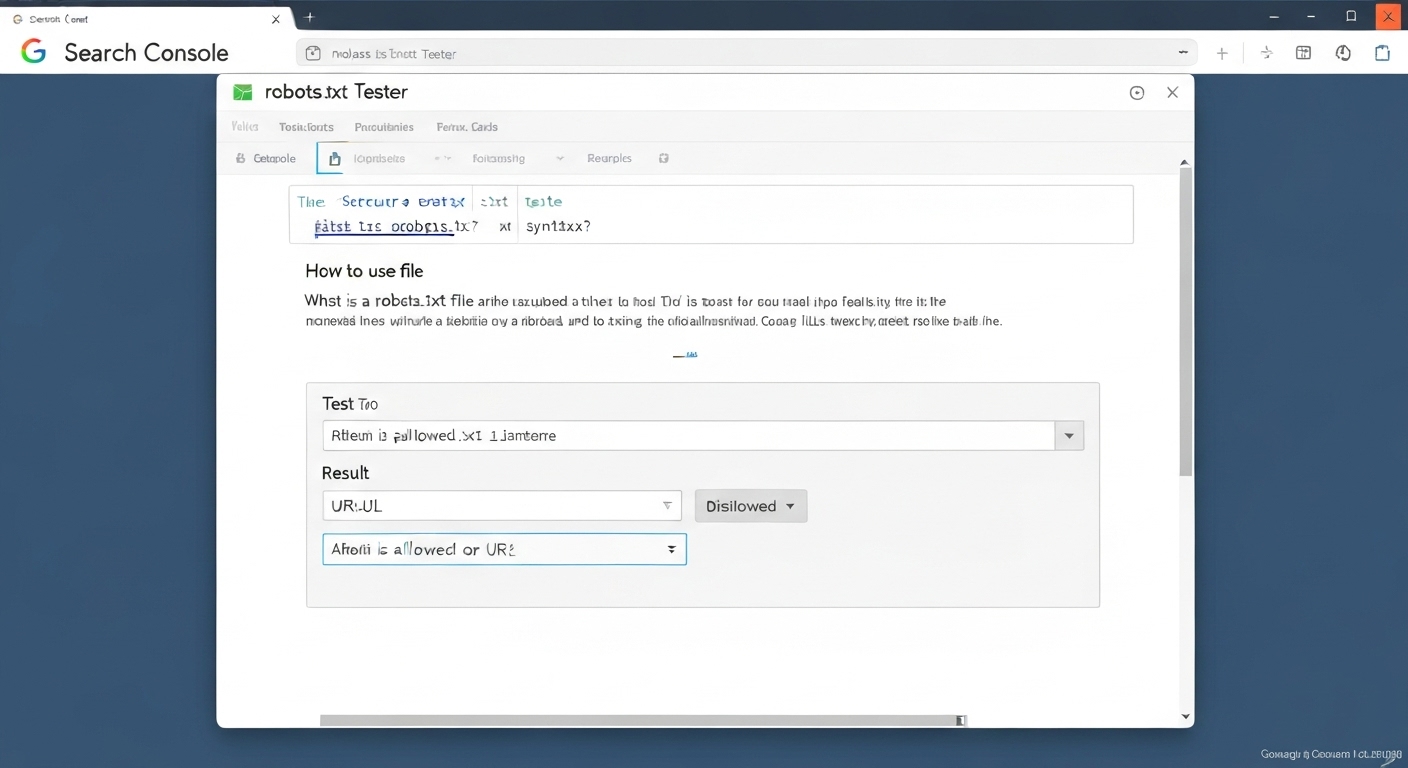

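- Test your robots.txt file: Use a tool such as the robots.txt report in Google Search Console (which replaced the older robots.txt Tester) to confirm that the file can be fetched and parsed, and check individual URLs against your rules to verify that they work as intended.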

- Be specific with your directives: Avoid using overly broad Disallow rules that could unintentionally block access to important content.

- Use comments to explain your rules: Add comments to your robots.txt file to explain the purpose of each rule. This will help you and others understand the file’s logic in the future. Comments start with a ‘#’.

- Remember that robots.txt is a request, not an enforcement mechanism: While most reputable search engines respect your robots.txt file, malicious bots or rogue crawlers may ignore it. For true security, use password protection or other access control mechanisms.

How to Create and Implement a Robots.txt File

Creating and implementing a robots.txt file is a straightforward process:

- Create the robots.txt file: Open a plain text editor and create a new file named ‘robots.txt’.

- Add your directives: Add the necessary User-agent, Disallow, Allow, and Sitemap directives to the file, following the syntax described above.

- Save the file: Save the file as ‘robots.txt’ in UTF-8 encoding.

- Upload the file to your website’s root directory: Upload the robots.txt file to the root directory of your website using an FTP client or your web hosting control panel.

- Test the file: Verify that the robots.txt file is accessible by visiting https://www.example.com/robots.txt in your web browser (replace ‘www.example.com’ with your actual domain name). Also, use a testing tool to confirm that your rules are working correctly.
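The create, save, and test steps above can also be scripted. The following is a minimal sketch under stated assumptions: the rules, the `/admin/` path, the sitemap URL, and the crawler name are placeholders, and a temporary directory stands in for your real web root. It writes the file as UTF-8 and then parses it back with Python's `urllib.robotparser` as a basic sanity check before upload.

```python
import tempfile
from pathlib import Path
from urllib.robotparser import RobotFileParser

RULES = """\
# Keep crawlers out of the admin area (hypothetical path).
User-agent: *
Disallow: /admin/

Sitemap: https://www.example.com/sitemap.xml
"""


def write_robots_txt(directory: Path, rules: str) -> Path:
    """Save the rules as robots.txt in UTF-8 and return the file path."""
    target = Path(directory) / "robots.txt"
    target.write_text(rules, encoding="utf-8")
    return target


def load_robots_txt(path: Path) -> RobotFileParser:
    """Parse a local robots.txt file so its rules can be queried."""
    parser = RobotFileParser()
    parser.parse(path.read_text(encoding="utf-8").splitlines())
    return parser


if __name__ == "__main__":
    # The temporary directory is a stand-in for the site's root directory.
    with tempfile.TemporaryDirectory() as web_root:
        saved = write_robots_txt(Path(web_root), RULES)
        parser = load_robots_txt(saved)
        print(saved.name)
        print(parser.can_fetch("ExampleBot", "/admin/login"))
        print(parser.can_fetch("ExampleBot", "/blog/"))
```

This only checks the file locally; confirming that the live URL is reachable still requires a browser visit or an HTTP request against your own domain.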

Tools for Creating and Testing Robots.txt Files

Several tools can assist you in creating and testing robots.txt files:

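- Google Search Console: Its robots.txt report (the successor to the older robots.txt Tester) shows the robots.txt files Google found for your site, when they were last crawled, and any warnings or errors. The URL Inspection tool shows whether a specific URL is blocked by robots.txt.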

- Various online robots.txt generators: These tools provide a user-friendly interface for creating robots.txt files based on your specific needs. However, always review the generated file carefully before implementing it.

Common Mistakes to Avoid

Here are some common mistakes to avoid when working with robots.txt files:

- Blocking important content: Accidentally disallowing access to crucial pages can prevent them from being indexed and negatively impact your search rankings.

- Using incorrect syntax: Errors in your robots.txt syntax can render your directives ineffective.

- Assuming robots.txt provides security: As mentioned earlier, robots.txt is not a security measure. Use password protection or other access control mechanisms to protect sensitive content.

- Forgetting to update the file: Make sure to update your robots.txt file whenever you make significant changes to your website’s structure or content.
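Syntax slips such as a misspelled directive or a missing colon are easy to catch automatically. The sketch below is a deliberately small, hypothetical linter, not an official validator: the set of accepted directives and the wording of the messages are assumptions, and it checks syntax only, not whether the rules block important content.

```python
# Directives this sketch accepts; real crawlers may recognize others.
KNOWN_DIRECTIVES = {"user-agent", "disallow", "allow", "sitemap", "crawl-delay"}


def lint_robots_txt(text: str) -> list[str]:
    """Return a list of human-readable problems found in a robots.txt body."""
    problems = []
    seen_user_agent = False
    for number, raw in enumerate(text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and whitespace
        if not line:
            continue
        if ":" not in line:
            problems.append(f"line {number}: missing ':' separator")
            continue
        name, value = (part.strip() for part in line.split(":", 1))
        key = name.lower()
        if key not in KNOWN_DIRECTIVES:
            problems.append(f"line {number}: unknown directive '{name}'")
        elif key == "user-agent":
            seen_user_agent = True
        elif key in {"allow", "disallow", "crawl-delay"} and not seen_user_agent:
            problems.append(f"line {number}: '{name}' appears before any User-agent line")
        elif key in {"allow", "disallow"} and value and not value.startswith(("/", "*")):
            problems.append(f"line {number}: path '{value}' should start with '/'")
    return problems


if __name__ == "__main__":
    sample = "User-agent: *\nDisalow: /admin/\nDisallow private/\n"
    for problem in lint_robots_txt(sample):
        print(problem)
```

Running a check like this before every deployment also helps with the last item in the list, since it gives you a reason to reread the file whenever the site changes.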

Advanced Robots.txt Techniques

Beyond the basic directives, you can use some advanced techniques to fine-tune your robots.txt file:

Using Wildcards

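You can use the * wildcard to match any sequence of characters in a URL, and $ to anchor a pattern to the end of the URL. For example, Disallow: /*.php$ blocks every URL that ends in .php. Because of the $ anchor, a URL such as /index.php?id=1 is not matched; drop the $ if you want to block those URLs as well.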
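Python's built-in `urllib.robotparser` does plain prefix matching and does not interpret `*` or `$`, so the sketch below implements the pattern semantics by hand to show how they behave. It is an illustration of the matching idea only; the helper names `compile_rule` and `rule_matches` are made up for this example, and real crawlers add further details such as percent-encoding normalization.

```python
import re


def compile_rule(pattern: str) -> re.Pattern:
    """Translate a robots.txt path pattern into a regular expression."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape every literal piece, then let each '*' match any run of characters.
    body = ".*".join(re.escape(part) for part in pattern.split("*"))
    # A trailing '$' pins the match to the end of the URL; otherwise it is a prefix match.
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(pattern: str, path: str) -> bool:
    """Return True if the rule pattern matches the URL path (and query string)."""
    return compile_rule(pattern).match(path) is not None


if __name__ == "__main__":
    print(rule_matches("/*.php$", "/index.php"))       # True
    print(rule_matches("/*.php$", "/index.php?id=1"))  # False
    print(rule_matches("/*.php", "/index.php?id=1"))   # True
```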

Blocking Specific URL Parameters

You can block crawlers from accessing URLs with specific parameters. This can be useful for preventing duplicate content issues caused by tracking parameters or session IDs.
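As a rough sketch, the rules for blocking a parameter can be generated from a list of parameter names. The names used here (`sessionid`, `utm_source`) are hypothetical, and the generated patterns rely on the `*` wildcard, so they only work for crawlers that support it. Two rules are emitted per parameter because it may appear first in the query string (after `?`) or later (after `&`).

```python
def parameter_block_rules(parameter_names: list[str]) -> list[str]:
    """Build Disallow lines that match URLs carrying any of the given parameters."""
    rules = []
    for name in parameter_names:
        rules.append(f"Disallow: /*?{name}=")  # parameter is first in the query string
        rules.append(f"Disallow: /*&{name}=")  # parameter follows another one
    return rules


if __name__ == "__main__":
    print("User-agent: *")
    for rule in parameter_block_rules(["sessionid", "utm_source"]):
        print(rule)
```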

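Regular Expressions Are Not Supported

Robots.txt rules are not regular expressions. The pattern syntax recognized by major search engines and by RFC 9309 is limited to the * wildcard and the $ end-of-URL anchor; other characters with special meaning in regular expressions, such as + or [ ], are matched literally. If you need more complex matching, combine several Allow and Disallow rules instead.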

The Future of Robots.txt

The robots.txt convention dates back to 1994 and was for decades only a de facto standard. In 2019 Google proposed formalizing it, and in 2022 the Robots Exclusion Protocol was published as RFC 9309. The RFC covers the core behavior (User-agent groups, Allow and Disallow rules, and the * and $ special characters), while directives such as Crawl-delay remain non-standard extensions. As search engine technology evolves, it’s possible that the standard will be extended or complemented by more sophisticated mechanisms for controlling crawler behavior.

Conclusion

Understanding what a robots.txt file is and how to use it is essential for effective website management and search engine optimization. By carefully crafting your robots.txt file, you can control how search engine crawlers access your website, optimize your crawl budget, keep crawlers out of areas that should not be crawled, and ultimately improve your SEO performance. Remember to follow best practices, test your file thoroughly, and stay up to date with developments in SEO and web crawling. Always remember that while robots.txt is a valuable tool, it is not a security measure and should not be relied upon to protect sensitive information. For more information, refer to the official specification, RFC 9309, at rfc-editor.org. And be sure to check out flashs.cloud for other SEO resources.
