Automating Content Moderation with AI: A Comprehensive Guide

Automating content moderation with AI is rapidly becoming essential for online platforms striving to maintain safe and engaging environments. The sheer volume of user-generated content makes manual moderation unsustainable, necessitating the adoption of intelligent automated solutions.

The Growing Need for AI in Content Moderation

The explosion of online content, from social media posts to forum discussions and e-commerce product reviews, presents a massive challenge for platforms. Manually reviewing this content is time-consuming, expensive, and prone to human error. AI offers a scalable and efficient solution for detecting and removing harmful content, such as hate speech, harassment, and spam.

Benefits of Content Moderation Automation

- Improved Accuracy: With representative training data, AI models can be trained to recognize patterns of harmful content that human moderators working at high volume might miss.

- Reduced Costs: Automating routine moderation tasks reduces the number of items human moderators must review, and with it the size of the team required.

- Increased Efficiency: AI can process vast amounts of content in real time, so violations are flagged sooner.

- Enhanced User Experience: A safe and welcoming online environment fosters user engagement and loyalty.

- Scalability: AI solutions can easily scale to accommodate growing content volumes.

Automated content moderation helps create safer online environments.

How AI Powers Content Moderation Tools

AI-powered content moderation tools utilize various techniques, including natural language processing (NLP), machine learning (ML), and computer vision, to analyze and classify content. These tools can identify a wide range of violations, from explicit hate speech to subtle forms of harassment and misinformation.

Key AI Techniques Used in Content Moderation

- Natural Language Processing (NLP): Analyzes text to understand its meaning and sentiment, identifying potentially harmful language patterns.

- Machine Learning (ML): Enables algorithms to learn from data and improve their accuracy in detecting violations over time.

- Computer Vision: Identifies inappropriate or harmful content in images and videos.

- Deep Learning: A subset of machine learning that uses artificial neural networks with multiple layers to model more complex patterns in text, images, and video.

These technologies enable the automatic detection of policy violations across text, images, and videos.
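To make the "learn from data" idea concrete, here is a minimal sketch of a text classifier built on multinomial Naive Bayes with add-one smoothing, using only the Python standard library. The class name `NaiveBayesModerator`, the labels `spam` and `ok`, and the six training sentences are all hypothetical and chosen for illustration. Production moderation tools typically rely on much larger models and data sets.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesModerator:
    """Toy multinomial Naive Bayes classifier with add-one smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()              # label -> number of examples
        self.vocab = set()

    def train(self, examples):
        """Count words per label from (text, label) pairs."""
        for text, label in examples:
            self.doc_counts[label] += 1
            for word in tokenize(text):
                self.word_counts[label][word] += 1
                self.vocab.add(word)

    def predict(self, text):
        """Return the label with the highest log-probability for the text."""
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label in self.doc_counts:
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(self.word_counts[label].values())
            for word in tokenize(text):
                count = self.word_counts[label][word] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Hypothetical labeled examples; a real system needs far more data.
TRAINING_DATA = [
    ("buy cheap pills now", "spam"),
    ("click here to win free money", "spam"),
    ("limited offer click now", "spam"),
    ("thanks for the helpful review", "ok"),
    ("great product, arrived on time", "ok"),
    ("does anyone know how to reset the device", "ok"),
]

model = NaiveBayesModerator()
model.train(TRAINING_DATA)
print(model.predict("win free pills now"))            # spam
print(model.predict("thanks, the product is great"))  # ok
```

The model never sees an explicit rule such as "block the word pills". It infers which words are associated with each label from the examples, which is why the quality of the training data matters so much.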

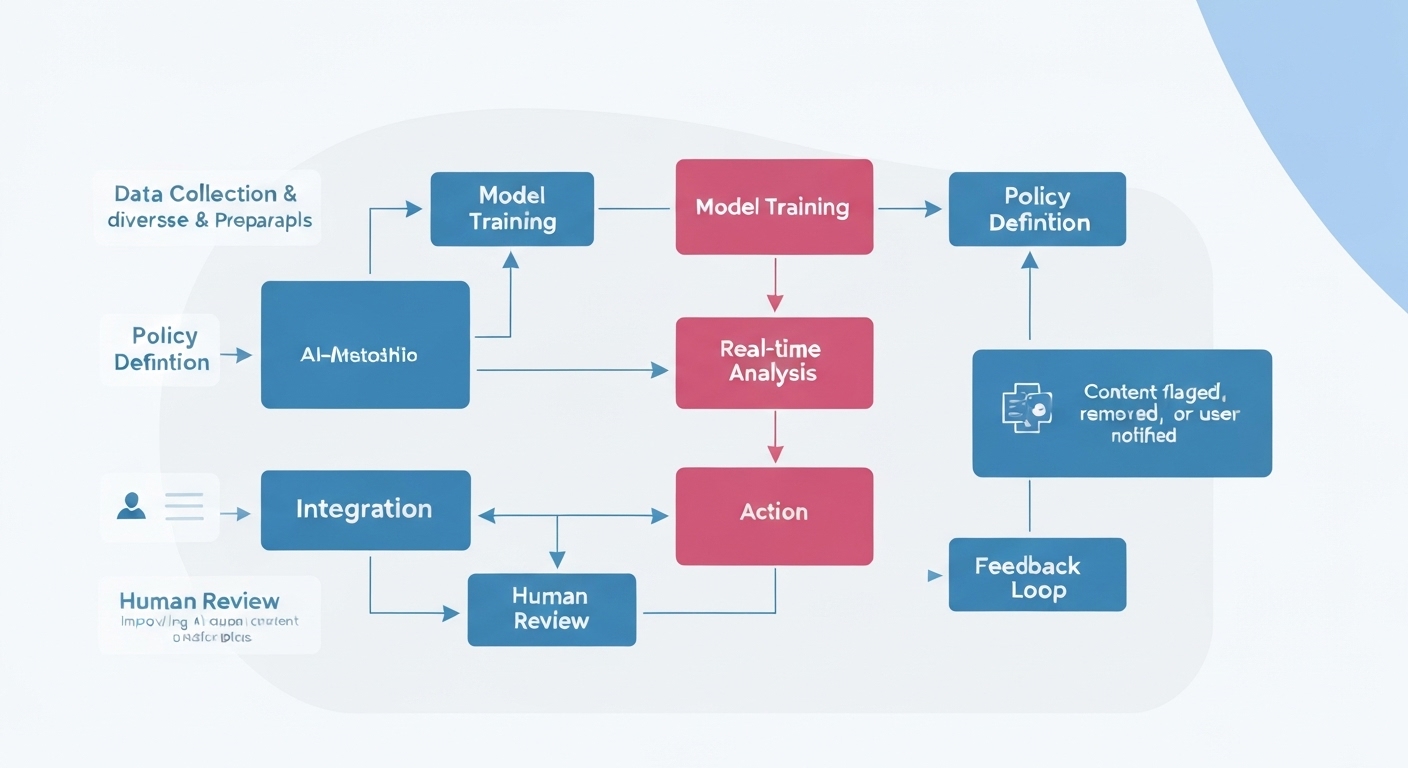

Implementing AI Content Moderation

Implementing AI content moderation involves several key steps, from selecting the right tools to training the AI models and establishing clear moderation policies. It’s crucial to tailor the AI system to your specific needs and community guidelines.

Steps for Effective Implementation

- Define Clear Moderation Policies: Establish clear and specific guidelines for acceptable content.

- Choose the Right AI Tools: Select tools that align with your platform’s needs and content types.

- Train the AI Models: Provide the AI with high-quality training data to improve its accuracy.

- Monitor and Refine: Continuously monitor the AI’s performance and make adjustments as needed.

- Human Oversight: Implement a system for human moderators to review and override AI decisions when necessary.

Careful planning and execution are essential for successful content moderation automation.
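The steps above can be sketched as a small routing function: the moderation policy is written down as data, and each piece of content is allowed, sent to a human, or removed depending on the score the model assigns. Everything here is an assumption made for illustration, including the names `PolicyRule`, `POLICY`, and `route`, the category names, and the threshold values, which each platform would need to tune against its own guidelines.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """Thresholds for one policy category (values are illustrative)."""
    category: str
    review_threshold: float   # at or above this score: send to a human
    remove_threshold: float   # at or above this score: remove automatically


# Hypothetical policy table; real thresholds come from your own evaluation.
POLICY = {
    "hate_speech": PolicyRule("hate_speech", 0.40, 0.90),
    "spam": PolicyRule("spam", 0.60, 0.95),
}


def route(scores):
    """Map model scores (category -> value in [0, 1]) to (action, category)."""
    decision = ("allow", None)
    for category, score in scores.items():
        rule = POLICY.get(category)
        if rule is None:
            continue  # no policy defined for this category
        if score >= rule.remove_threshold:
            return ("remove", category)
        if score >= rule.review_threshold and decision[0] == "allow":
            decision = ("human_review", category)
    return decision


print(route({"spam": 0.97}))                       # ('remove', 'spam')
print(route({"hate_speech": 0.50, "spam": 0.10}))  # ('human_review', 'hate_speech')
print(route({"spam": 0.20}))                       # ('allow', None)
```

Keeping the thresholds in a table rather than in the code makes the "monitor and refine" step easier, since a threshold can be adjusted without touching the routing logic.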

Challenges and Considerations in Automating Content Moderation

While AI offers significant benefits, it’s important to acknowledge the challenges and limitations of automating content moderation with AI. AI models can make mistakes, particularly when dealing with nuanced language, sarcasm, or cultural context. Bias in training data sets is a further concern, because a model trained on skewed examples can flag content from some groups more often than content from others. Addressing these challenges requires a thoughtful approach and ongoing monitoring.

Addressing the Challenges

- Bias Mitigation: Ensure that training data is diverse and representative to avoid biased outcomes.

- Contextual Understanding: Develop AI models that can understand the context of online conversations.

- Transparency and Explainability: Provide users with clear explanations of why content was flagged or removed.

- Continuous Improvement: Continuously update and refine AI models to improve their accuracy and adapt to evolving online trends.
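One way to check whether bias mitigation is working is to compare error rates across groups of users. The sketch below computes the false positive rate per group, meaning the share of acceptable content that the model flagged anyway. The function name `false_positive_rates`, the group labels, and the sample records are hypothetical. A real audit would use a much larger, human-labeled sample.

```python
from collections import defaultdict


def false_positive_rates(records):
    """Compute the false positive rate for each group.

    records: iterable of (group, flagged_by_model, actually_violating).
    Only non-violating items count, since a false positive is benign
    content that the model flagged.
    """
    benign = defaultdict(int)
    flagged_benign = defaultdict(int)
    for group, flagged, violating in records:
        if not violating:
            benign[group] += 1
            if flagged:
                flagged_benign[group] += 1
    return {group: flagged_benign[group] / benign[group] for group in benign}


# Hypothetical audit sample: (group, flagged_by_model, actually_violating)
AUDIT_SAMPLE = [
    ("dialect_a", True, False),
    ("dialect_a", False, False),
    ("dialect_a", False, False),
    ("dialect_a", True, True),
    ("dialect_b", True, False),
    ("dialect_b", True, False),
    ("dialect_b", False, False),
    ("dialect_b", False, True),
]

rates = false_positive_rates(AUDIT_SAMPLE)
for group, rate in sorted(rates.items()):
    print(f"{group}: {rate:.2f}")
```

In this made-up sample, benign posts from `dialect_b` are flagged twice as often as benign posts from `dialect_a`. A gap like that would be a signal to revisit the training data.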

A human-in-the-loop approach is critical: human moderators review AI decisions and override them when necessary, so that complex or ambiguous cases are handled appropriately. Being transparent with users about how those decisions are made helps maintain their trust.
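A minimal sketch of what a human override might look like in code follows, assuming a hypothetical `ModerationCase` record. The AI decision is stored alongside any later human decision, the human decision wins when present, and the notice shown to the user states who made the final call. The field names and the notice wording are illustrative only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModerationCase:
    """One moderation decision, with room for a human override."""
    content_id: str
    ai_action: str                      # e.g. "remove" or "allow"
    policy: str                         # policy category the AI matched
    human_action: Optional[str] = None  # set only if a moderator reviews it
    reviewer: Optional[str] = None

    @property
    def final_action(self):
        """The human decision takes precedence over the AI decision."""
        if self.human_action is not None:
            return self.human_action
        return self.ai_action

    def override(self, reviewer, action):
        """Record a human decision; the original AI decision is kept."""
        self.reviewer = reviewer
        self.human_action = action

    def user_notice(self):
        """Build a short, plain-language explanation for the user."""
        if self.human_action is not None:
            decided_by = "human moderator"
        else:
            decided_by = "automated system"
        return (
            f"Decision on {self.content_id}: {self.final_action} "
            f"(policy: {self.policy}, decided by: {decided_by})"
        )


case = ModerationCase("post-17", ai_action="remove", policy="harassment")
print(case.user_notice())

case.override(reviewer="moderator_1", action="allow")
print(case.user_notice())
```

Keeping both decisions on the same record gives an audit trail, and the cases where a human disagreed with the model are useful candidates for future training data.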

Future Trends in AI Content Moderation

The field of AI content moderation continues to evolve as new techniques emerge. Likely directions include more sophisticated AI models that better handle context and nuance, and the use of AI to proactively identify and prevent harmful content before it’s posted.

Emerging Trends

- Advanced NLP: Improved understanding of language nuances and context.

- Proactive Moderation: Identifying and preventing harmful content before it’s posted.

- Multimodal Analysis: Analyzing text, images, and videos together to gain a more comprehensive understanding of content.

- Federated Learning: Training AI models on decentralized data sources, so that raw user data stays where it was created and only model updates are shared, which helps protect user privacy.

These advancements promise to make online platforms even safer and more welcoming for users.
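As an illustration of the federated learning idea, the sketch below shows only the aggregation step commonly known as federated averaging: each participant trains locally and sends back model weights, and the server combines them in proportion to how many examples each participant trained on. The function name `federated_average` and the numbers are hypothetical, and a real deployment would add local training, secure transport, and usually further privacy protections.

```python
def federated_average(client_updates):
    """Combine client model weights, weighted by local example counts.

    client_updates: list of (weights, num_examples), where weights is a
    list of floats with the same length for every client.
    """
    total_examples = sum(n for _, n in client_updates)
    if total_examples == 0:
        raise ValueError("at least one client must report examples")

    size = len(client_updates[0][0])
    averaged = [0.0] * size
    for weights, n in client_updates:
        if len(weights) != size:
            raise ValueError("all clients must send weights of equal length")
        for i, w in enumerate(weights):
            averaged[i] += w * n / total_examples
    return averaged


# Two hypothetical clients; raw content never leaves them, only weights do.
updates = [
    ([1.0, 2.0], 100),  # client A trained on 100 examples
    ([3.0, 4.0], 300),  # client B trained on 300 examples
]
print(federated_average(updates))  # [2.5, 3.5]
```

Client B contributes three times as many examples, so the averaged weights sit closer to its values.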

The Role of Human Moderators

Even with the advancements in AI, human moderators remain an essential part of the content moderation process. They can provide context and understanding that AI models may lack, and they can handle complex or ambiguous cases that require human judgment.

The Importance of Collaboration

- Handling edge cases: Human moderators address situations where AI algorithms struggle with nuance or context.

- Providing empathy and understanding: Human moderators can offer support and understanding to users affected by content moderation decisions.

- Ensuring fairness and transparency: Human moderators can review AI decisions to ensure fairness and transparency in the content moderation process.

The best approach to content moderation involves a collaboration between AI and human moderators, leveraging the strengths of both to create a safer and more positive online experience. Learn more about content safety at DHS.gov.


Conclusion

Automating content moderation with AI is crucial for maintaining safe and engaging online environments. While challenges exist, the benefits of AI-powered moderation, including improved accuracy, reduced costs, and increased efficiency, are substantial. By combining AI with human oversight, online platforms can create a better experience for their users and foster more positive online communities. As AI technology continues to advance, we can expect more sophisticated and effective solutions for content moderation.
